SAN File Systems

Managing very large file systems has certain implications. For example:

- a single point of failure (if part of the file system becomes corrupt, does the entire file system go offline?)

- fsck may take an excessive amount of time (one reference I found cited 1 hour/TB for a clean file system; other references we've seen cite days for 1 TB, in reference to a mail spool)

| NOTE: once we receive our disks, before going online, we need to test this. Build this table out. |
|---|

| size | files | time |
|---|---|---|
| 264 GB | 28,000 (50% 1 MB & 50% 100 MB) | 15 min |
| 528 GB | 56,000 (50% 1 MB & 50% 100 MB) | 24 min |
| 792 GB | 84,000 (50% 1 MB & 50% 100 MB) | 26 min |
| 985 GB | 84,200 (50% 1 MB & 50% 100 MB) | 32 min |

| size | files | time |
|---|---|---|
| 250 GB | 62,500,000 files in 6,250 dirs (all 4 KB files, 10,000 per dir) | 25 min |
| 500 GB | 125,000,000 files in 12,500 dirs (all 4 KB files, 10,000 per dir) | 30 min |
| on clean file system with `fsck -y -f` | | |
| 280 GB | 8,400,000 files in 41,138 dirs (copy of mail spool) | 2 hrs 40 min |
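To compare the rows above on a common scale, the timings can be normalized to minutes of fsck per 100 GB. The following is a minimal sketch: the numbers are copied from the tables, while the labels and the function names `minutes_per_100gb` and `summarize` are made up for illustration.

```python
# (label, size in GB, file count, fsck minutes) copied from the tables above.
# The labels are our own shorthand; 2 hrs 40 min is entered as 160 minutes.
measurements = [
    ("large files", 264, 28_000, 15),
    ("large files", 528, 56_000, 24),
    ("large files", 792, 84_000, 26),
    ("large files", 985, 84_200, 32),
    ("4 KB files", 250, 62_500_000, 25),
    ("4 KB files", 500, 125_000_000, 30),
    ("mail spool copy", 280, 8_400_000, 160),
]


def minutes_per_100gb(size_gb, minutes):
    """Normalize an fsck run to minutes per 100 GB of file system size."""
    return minutes / size_gb * 100


def summarize(rows):
    """Return (label, size_gb, rate) tuples with the rate rounded to 0.1."""
    return [
        (label, size_gb, round(minutes_per_100gb(size_gb, minutes), 1))
        for label, size_gb, _files, minutes in rows
    ]


for label, size_gb, rate in summarize(measurements):
    print(f"{label:16s} {size_gb:4d} GB  {rate:5.1f} min per 100 GB")
```

On these figures the mail spool copy is by far the slowest per GB, so size alone is a poor predictor of fsck time.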

A better idea might be to split up the file systems. For the purposes of our discussion, let's assume that a corrupt 1 TB file system would result in 2-3 hours of fsck (file system checking and repair), and that we're willing to accept such downtime. We then create 2 volumes on our SAN.

- [top] SAN scratch volume, /vol/cluster_scratch is created with space guarantee = none, no snapshots, autogrow, and size = 1 TB.

- [bot] user home volume, /vol/cluster_home is created with space guarantee = none, 1 weekly snapshot, autogrow, and size = 10 TB.

LUNs in both volumes are created with the setting 'no space reserved'. This combination gives us thin provisioning: when the volumes and LUNs are created, the SAN does not reserve all the space requested. The autogrow feature allocates disk space as needed, until the LUN size is reached.
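The allocate-on-demand behaviour described above can be pictured with a toy model. This is only a sketch of the idea, not the SAN's actual interface: the class `ThinLun` and its fields are hypothetical, and the 50 GB write is an arbitrary example.

```python
from dataclasses import dataclass


@dataclass
class ThinLun:
    """Toy model of a thin-provisioned LUN (hypothetical, for illustration)."""

    name: str
    size_gb: int           # size requested at creation; acts as the ceiling
    allocated_gb: int = 0  # space actually taken from the volume so far

    def write(self, gb):
        """Allocate space on demand, refusing to grow past the LUN size."""
        if self.allocated_gb + gb > self.size_gb:
            raise ValueError(f"{self.name}: LUN size of {self.size_gb} GB reached")
        self.allocated_gb += gb


# A 1 TB LUN consumes nothing at creation and only 50 GB after a 50 GB write.
lun = ThinLun("/vol/cluster_home/lun1", size_gb=1000)
lun.write(50)
print(lun.name, lun.allocated_gb, "GB allocated of", lun.size_gb, "GB")
```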

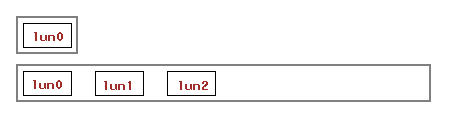

In the user volume, we can export from the io node the LUN labeled /vol/cluster_home/lun0 as /home/users. This file system would hold students, staff and others whom we do not expect to use a lot of disk space. For example, we can set an expected usage limit of 20 GB per user (100 users if all used their quota). If a user needs more space, the administrator will need to be contacted.

Other users, for example fstarr and dbeveridge, could occupy /vol/cluster_home/lun1 and /vol/cluster_home/lun2 respectively, each with a size of 1 TB. Those file systems are then NFS exported as /home/fstarr and /home/dbeveridge. This file space is not used until claimed by actual content. In the case of graduate students who need to collaborate with their faculty, the home directories for those students can be placed inside those faculty home directories.

In this manner, the largest exposure to a corrupt file system is a single LUN. The downtime would be proportional to the amount of disk space used and the time required to run fsck and other restore operations.

However, some monitoring program would have to be written. By creating lots of “thin provisioned” LUNs we are basically over-committing the amount of SAN disk space. But it also levels the playing field in terms of how much disk space each user could use. Users within the “users” LUN are limited to 20 GB (or whatever limit we set), while users who have their “own” LUN are limited to 1 TB. All users together are limited to 10 TB (actually 9 TB if the 1 TB scratch LUN is taken out).
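A first cut of such a monitoring check might look like the sketch below. Everything in it is an assumption made for illustration: the function name `overcommit_report`, the 80% warning threshold, the 3 TB of physical disk, the usage figures, and the 2 TB size for lun0 (taken as 100 users at 20 GB each). How the allocated figures are actually obtained from the SAN is left open.

```python
def overcommit_report(luns, physical_gb, warn_fraction=0.8):
    """Summarize thin-provisioned LUNs against the physical disk behind them.

    luns maps a LUN name to (provisioned_gb, allocated_gb).
    """
    provisioned = sum(size for size, _used in luns.values())
    allocated = sum(used for _size, used in luns.values())
    return {
        "provisioned_gb": provisioned,
        "allocated_gb": allocated,
        # A ratio above 1.0 means more space was promised than physically exists.
        "overcommit_ratio": provisioned / physical_gb,
        # Warn once the space actually allocated nears the physical limit.
        "warn": allocated >= warn_fraction * physical_gb,
    }


# Hypothetical snapshot: LUN sizes follow the layout above, usage is invented.
luns = {
    "/vol/cluster_home/lun0": (2000, 900),
    "/vol/cluster_home/lun1": (1000, 300),
    "/vol/cluster_home/lun2": (1000, 150),
}
report = overcommit_report(luns, physical_gb=3000)
print(report)
```

The point of the design is to watch the sum of what has actually been allocated, since the sum of provisioned sizes can exceed the physical disk by intent.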

| It should be noted that the available space in a LUN is a “high water mark”. If, for example, 75% is used and some large files are deleted, the available space remains the same. This is because the file system actually lives not on the filer but on the io node (Fibre Channel). However, new files written to the users' home directories can reuse the freshly deleted disk space, in effect backfilling the available space. |
|---|
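The high water mark behaviour in the note can be illustrated with a small simulation. This is a sketch under assumptions: the class `HighWaterLun` and its field names are hypothetical, and the model simply assumes the SAN never learns about deletions made by the file system on the io node.

```python
class HighWaterLun:
    """Toy model of a LUN whose SAN-side allocation never shrinks."""

    def __init__(self, size_gb):
        self.size_gb = size_gb
        self.in_use_gb = 0      # what the file system on the io node holds
        self.high_water_gb = 0  # what the SAN has allocated so far

    def write(self, gb):
        if self.in_use_gb + gb > self.size_gb:
            raise ValueError("LUN is full")
        self.in_use_gb += gb
        # Allocation grows only when usage exceeds the previous peak.
        self.high_water_gb = max(self.high_water_gb, self.in_use_gb)

    def delete(self, gb):
        # The file system frees the space; the SAN-side figure is untouched.
        self.in_use_gb = max(0, self.in_use_gb - gb)


lun = HighWaterLun(size_gb=1000)
lun.write(750)   # 75% used
lun.delete(300)  # large files removed; allocation stays at 750 GB
lun.write(200)   # new files backfill the freed space; allocation still 750 GB
print(lun.in_use_gb, lun.high_water_gb)
```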
