
The information displayed here will undoubtedly change very quickly, so your mileage and output may differ.

⇒ Read Platform/OCS's very good guide, Running Jobs with Platform Lava.

⇒ For every command in the examples below, the man command provides detailed information; for example, man bqueues.

Class Queues

As of 09/23/2008, there are 2 class queues targeted at classroom usage of the cluster. To use these queues you must have a class account; these take the form hpc100-hpc199. Instructors can request these accounts for classes that will use the cluster.

Once you have a class account you may use either one of these queues. The 'iclass' queue submits jobs to the Infiniband enabled nodes, while the 'eclass' queue submits jobs to the gigabit ethernet enabled nodes of the 'elw', 'emw' and 'ehw' queues. You can target individual hosts within the 'iclass' or 'eclass' queues with the -m flag of 'bsub'.
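As a hedged illustration of the -m flag, the sketch below only prints a bsub command line and submits nothing. The helper name class_bsub_cmd and the program ./my_program are hypothetical, and it assumes that compute-1-1, one of the Infiniband nodes, is a member of 'iclass'.

```shell
# Hypothetical helper: print a bsub command line that sends a job to one
# specific host of a class queue. Nothing is submitted.
#   $1 = queue ('iclass' or 'eclass'), $2 = host, rest = program and arguments
class_bsub_cmd() {
    queue="$1"
    host="$2"
    shift 2
    printf 'bsub -q %s -m %s %s\n' "$queue" "$host" "$*"
}

# Assumes compute-1-1 belongs to 'iclass'; ./my_program is a placeholder.
class_bsub_cmd iclass compute-1-1 ./my_program
```

Running the printed line on the head node would perform the actual submission; check man bsub for the exact host syntax your version accepts.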

In order to get class assignments through the scheduler, these 2 queues have a high priority in job scheduling. In addition, these queues have been configured with the scheduling policy of PREEMPTIVE. This means that *if* jobs in these queues reach a state of PENDING, the scheduler will suspend currently running jobs on the least busy node in the 'eclass' or 'iclass' queue and dispatch the jobs of the class accounts. Jobs in the SSUSP (system suspended) state indicate this activity. Those jobs will resume as soon as the high priority jobs finish.

The class accounts hpc100-hpc199 are recycled each semester, so please save any data and programs in other locations.

<hi #ffff00>The rest of this page is out of date but may be helpful to read.

Queues are now listed on the “Tools Page” at http://petaltail.wesleyan.edu</hi>

General Queues

I'm leaving the old info below for historical purposes.

— Meij, Henk 2007/11/19 10:10

| Queue | Description | Priority | Number of Nodes | Total Mem Per Node (GB) | Total Cores In Queue | Switch | Hosts | Notes |
|---|---|---|---|---|---|---|---|---|
| elw | light weight nodes | 50 | 08 | 04 | 64 | gigabit ethernet | compute-1-17 thru compute-1-24 | |
| emw | medium weight nodes | 50 | 04 | 08 | 32 | gigabit ethernet | compute-1-25 thru compute-1-27 and compute-2-28 | |
| ehw | heavy weight nodes | 50 | 04 | 16 | 32 | gigabit ethernet | compute-2-29 thru compute-2-32 | |
| ehwfd | heavy weight nodes | 50 | 04 | 16 | 32 | gigabit ethernet | nfs-2-1 thru nfs-2-4 | fast local disks |
| imw | medium weight nodes | 50 | 16 | 08 | 128 | infiniband | compute-1-1 thru compute-1-16 | |
| matlab | any node | 50 | na | na | na | either | any host | max jobs 'per user' or 'per host' is 8 |

The following queues have been removed.

- debug/idebug; nobody used them.

- lwnodes/ilwnodes; reorganized, see above.

- idle; allowed simple jobs to get scheduled on hosts with lots of resources.

- gaussian; was restricted to ehwfd hosts and that's unnecessary.

- molscat; deprecated, use bsub -x or bsub -R to reserve resources.

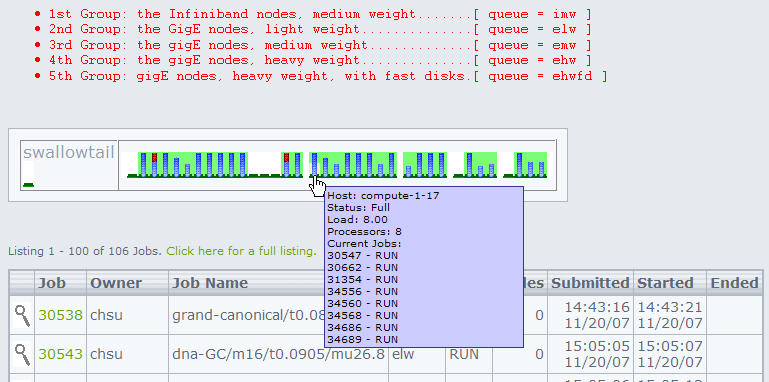

When CluMon works properly it should show a gaphical display like

The command bqueues -l queue_name reveals the node members of that queue. The command bhosts | grep -v closed then reveals the nodes that can still accept jobs (8 minus NJOBS equals the number of available job slots).
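The slot arithmetic can be sketched with awk. This is a minimal sketch, not a site-provided tool: the function name free_slots is hypothetical, it uses the MAX column instead of a hard-coded 8, and it keeps only hosts with status "ok", which is slightly stricter than grep -v closed. The NJOBS value of 3 in the sample input is made up so that the subtraction is visible.

```shell
# Read `bhosts` output on stdin and print "host free_slots" for each host
# whose STATUS is "ok". Column layout as printed by bhosts:
#   HOST_NAME STATUS JL/U MAX NJOBS RUN SSUSP USUSP RSV
free_slots() {
    awk 'NR > 1 && $2 == "ok" { print $1, $4 - $5 }'
}

# On the cluster this would be: bhosts | free_slots
# Here a few hand-written lines are fed in so the sketch runs anywhere.
free_slots <<'EOF'
HOST_NAME STATUS JL/U MAX NJOBS RUN SSUSP USUSP RSV
compute-1-1 ok - 8 3 3 0 0 0
ionode-1 closed - 0 0 0 0 0 0
nfs-2-4 unavail - 1 0 0 0 0 0
EOF
```

With this sample input only compute-1-1 is reported, with 5 free slots.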

old queue info

| Legend |
|---|
| lw ⇒ light weight nodes |
| hw ⇒ heavy weight nodes |
| i ⇒ as prefix means infiniband nodes |

    [hmeij@swallowtail ~]$ bqueues
    QUEUE_NAME     PRIO  STATUS       MAX  JL/U  JL/P  JL/H  NJOBS  PEND  RUN  SUSP
    debug          35    Open:Active  -    -     -     -     0      0     0    0
    idebug         35    Open:Active  -    -     -     -     0      0     0    0
    16-lwnodes     30    Open:Active  -    -     -     -     0      0     0    0
    16-ilwnodes    30    Open:Active  -    -     -     -     0      0     0    0
    04-hwnodes     30    Open:Active  -    -     -     -     0      0     0    0
    idle           20    Open:Active  8    8     -     -     0      0     0    0

bqueues summarizes the queues: it lists each queue's status (which may be closed) and relative priority, as well as the maximum number of jobs allowed, the maximum number of jobs allowed per user, and the number of jobs currently in the queue. A '-' means there is no limit.

So, for example, the idle queue is the only queue with limits: 8 job slots for the queue, and each user may only have 1 job in the queue. There are no other limits, such as CPU time or load thresholds at which a queue stops accepting jobs. A variety of queue settings can be applied; if you wish to read about them, see the Lava Admin Manual.
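To pick out the queues that carry a job-slot limit, the MAX column of the bqueues output can be filtered with awk. This is only a sketch: the function name limited_queues is hypothetical, and the sample input is a subset of the old listing shown on this page.

```shell
# Read `bqueues` output on stdin and print "queue MAX" for each queue whose
# MAX column is not '-', that is, each queue with a job-slot limit.
# Column layout as printed by bqueues:
#   QUEUE_NAME PRIO STATUS MAX JL/U JL/P JL/H NJOBS PEND RUN SUSP
limited_queues() {
    awk 'NR > 1 && $4 != "-" { print $1, $4 }'
}

# On the cluster this would be: bqueues | limited_queues
limited_queues <<'EOF'
QUEUE_NAME PRIO STATUS MAX JL/U JL/P JL/H NJOBS PEND RUN SUSP
debug 35 Open:Active - - - - 0 0 0 0
16-lwnodes 30 Open:Active - - - - 0 0 0 0
idle 20 Open:Active 8 8 - - 0 0 0 0
EOF
```

For this input the only line printed is the idle queue with its limit of 8.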

bqueues -l will give a detailed listing, here is a snippet:

    QUEUE: debug
      -- Debug queue for hosts login1 & login2 (ethernet). Scheduled with relatively high priority.
    QUEUE: idebug
      -- Debug queue for hosts ilogin1 & ilogin2 (infiniband). Scheduled with relatively high priority.
    QUEUE: 16-lwnodes
      -- For normal jobs, light weight nodes.
    QUEUE: 16-ilwnodes
      -- For normal jobs, light weight nodes on infiniband
    QUEUE: 04-hwnodes
      -- For large memory jobs, heavy weight nodes with 16 gb memory each. /localscratch is dedicated fast disks of about 230 gb for each node
    QUEUE: idle
      -- Running only if the machine is idle and very lightly loaded. Max cores=8, Max jobs/user=1. Any node. This is the default queue.

Other information that is listed in this output is which hosts are part of the queue and which users may submit to these queues. Queues idebug and debug correspond to the back end hosts users may log into, respectively ilogin1/ilogin2 and login1/login2.

The Hosts

Information about individual hosts can be obtained with the bhosts command.

    [hmeij@swallowtail ~]$ bhosts
    HOST_NAME      STATUS   JL/U  MAX  NJOBS  RUN  SSUSP  USUSP  RSV
    compute-1-1    ok       -     8    0      0    0      0      0
    compute-1-10   ok       -     8    0      0    0      0      0
    compute-1-11   ok       -     8    0      0    0      0      0
    compute-1-12   ok       -     8    0      0    0      0      0
    compute-1-13   ok       -     8    0      0    0      0      0
    compute-1-14   ok       -     8    0      0    0      0      0
    compute-1-15   ok       -     8    0      0    0      0      0
    compute-1-16   ok       -     8    0      0    0      0      0
    compute-1-17   ok       -     8    0      0    0      0      0
    compute-1-18   ok       -     8    0      0    0      0      0
    compute-1-19   ok       -     8    0      0    0      0      0
    compute-1-2    ok       -     8    0      0    0      0      0
    compute-1-20   ok       -     8    0      0    0      0      0
    compute-1-21   ok       -     8    0      0    0      0      0
    compute-1-22   ok       -     8    0      0    0      0      0
    compute-1-23   ok       -     8    0      0    0      0      0
    compute-1-24   ok       -     8    0      0    0      0      0
    compute-1-25   ok       -     8    0      0    0      0      0
    compute-1-26   ok       -     8    0      0    0      0      0
    compute-1-27   ok       -     8    0      0    0      0      0
    compute-1-3    ok       -     8    0      0    0      0      0
    compute-1-4    ok       -     8    0      0    0      0      0
    compute-1-5    ok       -     8    0      0    0      0      0
    compute-1-6    ok       -     8    0      0    0      0      0
    compute-1-7    ok       -     8    0      0    0      0      0
    compute-1-8    ok       -     8    0      0    0      0      0
    compute-1-9    ok       -     8    0      0    0      0      0
    compute-2-28   ok       -     8    0      0    0      0      0
    compute-2-29   ok       -     8    0      0    0      0      0
    compute-2-30   ok       -     8    0      0    0      0      0
    compute-2-31   ok       -     8    0      0    0      0      0
    compute-2-32   ok       -     8    0      0    0      0      0
    ionode-1       closed   -     0    0      0    0      0      0
    nfs-2-1        ok       -     8    0      0    0      0      0
    nfs-2-2        ok       -     8    0      0    0      0      0
    nfs-2-3        ok       -     8    0      0    0      0      0
    nfs-2-4        unavail  -     1    0      0    0      0      0
    swallowtail    closed   -     0    0      0    0      0      0

The head node swallowtail and the NFS server will not serve as compute hosts unless necessary. In the listing above, one of the heavy weight nodes, nfs-2-4, is “unavail” because it is connected to the MD1000, where we just had a disk failure. So bqueues shows which hosts are members of a queue, while bhosts also tells you whether a host is actually up and running.

PRE_EXEC and POST_EXEC

It is important you understand the use of the filesystems.

Read the Filesystem page.

Every queue defines a script that executes before the job starts and a script that executes after the job finishes. If these scripts do not exit with a status of 0, your job is moved to the PEND status. That is not your problem, just so you know; notify the HPCadmin.

PRE_EXEC creates two directories for the job, owned by the job submitter (UID/GID).

- /sanscratch/$LSB_JOBID

- /localscratch/$LSB_JOBID

Inside these directories you can create your own directory structure if needed. This avoids any problems when a user submits multiple jobs.

You could also have multiple copies of your program running on the same host, submitted with a single job. I'd suggest using the process id of the shell the program itself runs under ($$ in bash shell syntax) to create a subdirectory structure inside these job-level scratch directories. Each program invocation then avoids conflicts in writes.
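The $$ suggestion might look like the sketch below. The function name make_workdir and the "nojob" fallback are hypothetical; the fallback and the temporary root exist only so the sketch can run outside the scheduler, where LSB_JOBID is not set and /sanscratch does not exist.

```shell
# Create a private working directory for this program invocation inside a
# job-level scratch directory, then print its path.
#   $1 = scratch root (/sanscratch or /localscratch on the cluster)
# LSB_JOBID is set by the scheduler; "nojob" is a stand-in for dry runs.
make_workdir() {
    root="${1:-/sanscratch}"
    workdir="$root/${LSB_JOBID:-nojob}/$$"
    mkdir -p "$workdir" || return 1
    printf '%s\n' "$workdir"
}

# Dry run under a temporary root instead of the real scratch filesystem.
demo_root="$(mktemp -d)"
MYSCRATCH="$(make_workdir "$demo_root")"
echo "working in $MYSCRATCH"
rm -rf "$demo_root"
```

Inside a real job script the call would be make_workdir /sanscratch or make_workdir /localscratch, and each copy of the program would write only below its own directory.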

If each program needs to read, say, the same data file, create a shared directory for it, for example in your home directory. Data that needs to be shared by multiple users and/or groups can be staged in a variety of ways. Contact the HPCadmin.

⇒ POST_EXEC removes the two scratch directories for the job.

In your scripts, copy your results to your home directory before the job finishes. Try to avoid frequent writes to results files in your home directory, as this will tax both our head node and io node.
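Since POST_EXEC removes the scratch directories, the last step of a job script could copy everything back in one pass. The following is a minimal sketch under stated assumptions: the function name save_results, the file name output.dat, and the directory names are hypothetical, and the demo uses a temporary directory in place of the real scratch and home filesystems.

```shell
# Copy the whole contents of a scratch work directory to a results
# directory in one pass, creating the destination if needed.
#   $1 = scratch work directory, $2 = destination (e.g. under $HOME)
save_results() {
    src="$1"
    dest="$2"
    mkdir -p "$dest" && cp -Rp "$src"/. "$dest"/
}

# Dry run with temporary directories standing in for scratch and home.
demo="$(mktemp -d)"
mkdir -p "$demo/scratch"
echo "result" > "$demo/scratch/output.dat"   # the program writes to scratch
save_results "$demo/scratch" "$demo/home/run1"
ls "$demo/home/run1"
rm -rf "$demo"
```

Writing to scratch while the program runs and copying once at the end keeps the frequent small writes off the home directory.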

Sample scripts on how to manage your directories are provided in the job submission pages.