
A parallel code example pulled from the BCCD project to probe the question: what is parallel computing?

⇒ This is page 1 of 3, navigation provided at bottom of page

GalaxSee: N-Body Physics

Default Behavior

The problem is described Here.

The Shodor web version of GalaxSee (external link)

The program GalaxSee is located in /share/apps/Gal and was compiled against OpenMPI-1.2, the “intel” version. When this OpenMPI version was compiled, it was also linked against the Infiniband libraries. For now, though, we'll stick to running the GalaxSee parallel code across the Ethernet GigE switch.

It was compiled with /share/apps/openmpi-1.2_intel/bin/mpicc, so we'll use the OpenMPI tools in our invocation. Strictly speaking, mpicc only compiles and links the code; it is the MPI runtime, started through mpirun, that sets up our “server” and “workers” to process the code.

To get a sense of what it does, let's run it interactively with a display on the head node. No parameters are given, so it runs with the default values (1000 100 1000 for number of bodies, mass of bodies, and run time):

[hmeij@swallowtail ~]$ /share/apps/Gal/GalaxSee

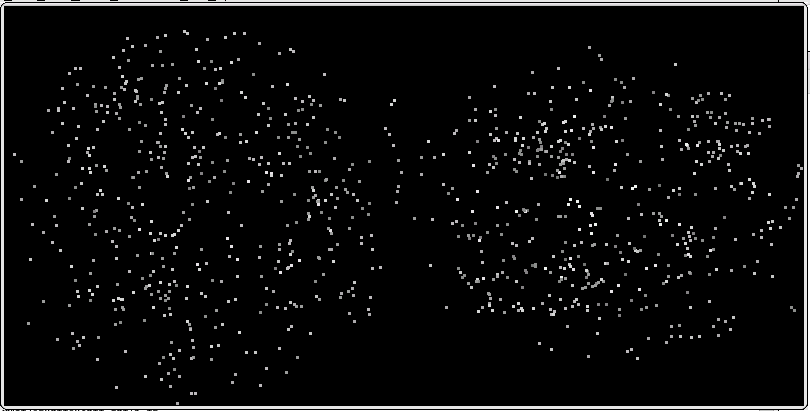

A screen popups with slowly rotating bodies of mass, which looks like this:

Now let's add more bodies, more mass, and a longer run time:

[hmeij@swallowtail ~]$ /share/apps/Gal/GalaxSee 2500 400 5000

That seems to tax the localhost, as the frames start to update erratically. These invocations just execute the code on a single core; no parallel processing is involved.

Parallel:Localhost

Submitting a job in parallel needs a helper program, mpirun. Several parameters are passed to it, such as the number of “processors” we want to use (-np, read: cores) and a reference to a file (-machinefile) specifying on which hosts to find these cores. For example, let's use 2 cores on the head node. (Note: the machinefile lists 8 individual lines because the head node has dual quad-core processors, thus 8 cores are available.) Rather than the actual hostname we use 'localhost', so we can reuse the file later.

/share/apps/openmpi-1.2/bin/mpirun -np 2 -machinefile /share/apps/Gal/machines /share/apps/Gal/GalaxSee 2500 400 5000

Parallel speedup in action! Next, invoke more cores: go from 2 to 4, then 8. The frames are back to running smoothly. When the program runs in parallel mode, the force calculations finish faster, so each frame is ready sooner and the display renders smoothly.
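The machinefile described above is just a text file with one host entry per line, one line per core. As a minimal sketch (in Python, chosen here only for illustration), the following writes such a file and assembles the mpirun command line from the text without executing it. The scratch path and the function names `write_machinefile` and `mpirun_command` are hypothetical; the real file lives at /share/apps/Gal/machines.

```python
from pathlib import Path
import tempfile


def write_machinefile(path, host="localhost", cores=8):
    """Write one line per core; each line names the host providing that core."""
    lines = [host] * cores
    Path(path).write_text("\n".join(lines) + "\n")
    return lines


def mpirun_command(np, machinefile, program, args):
    """Assemble the mpirun command line shown in the text (it is not executed)."""
    return [
        "/share/apps/openmpi-1.2/bin/mpirun",
        "-np", str(np),
        "-machinefile", str(machinefile),
        program,
        *[str(a) for a in args],
    ]


# Hypothetical scratch location, so the sketch does not touch /share/apps.
demo_path = Path(tempfile.gettempdir()) / "machines-demo"
machine_lines = write_machinefile(demo_path)

command = mpirun_command(2, demo_path, "/share/apps/Gal/GalaxSee", [2500, 400, 5000])
print(demo_path.read_text(), end="")
print(" ".join(command))
```

Because every line says localhost rather than a real hostname, the same file works unchanged on any dual quad-core node.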

Parallel:Code

So how is that done?

| The GalaxSee code is a simple implementation of parallelism. Since most of the time in a given N-Body model is spent calculating the forces, we only parallelize that part of the code. “Client” programs that just calculate accelerations are fed every particle’s information, and a list of which particles that client should compute. A “server” runs the main program, and sends out requests and collects results during the force calculation. |

And what does that look like?

[root@swallowtail Gal]# grep MPI_ *cpp
Gal.cpp: MPI_Init(argc,argv);
derivs_client.cpp: MPI_COMM_WORLD,&g_mpi.status);
derivs.cpp: MPIDATA_PASSMASS,MPI_COMM_WORLD,&mass_request[i]);
derivs.cpp: MPIDATA_PASSSHIELD,MPI_COMM_WORLD,&srad_request[i]);
derivs.cpp: MPI_Isend(0,0,MPI_INT,i,MPIDATA_DODERIVS,...
derivs.cpp: MPI_Recv(retval,number_per_cpu[i]*6,...
derivs.cpp: MPI_Request * gnorm_request = new MPI_Request[g_mpi.size];
derivs.cpp: MPI_Wait(&gnorm_request[i], &g_mpi.status );
Gal.cpp: MPI_Finalize();

MPI_Init sets up the “workers” and “server”. MPI_Finalize destroys them all. Other MPI calls do different things: send info, receive info, send info & wait, request info & wait … etc. For a list of MPI calls go to this External Link.
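The server/client split quoted above can be sketched without MPI at all. The Python below is an illustration under stated assumptions, not the GalaxSee source: the contiguous split of particle indices, the gravitational constant `g`, the softening term `soft`, and all function names are hypothetical, and the "clients" run one after another in a loop instead of as separate MPI processes. What it does show is the idea from the description: every client sees all particles but computes accelerations only for its own list, and the server merges the results.

```python
import math


def split_indices(n_particles, n_clients):
    """Give each client a contiguous, nearly equal share of particle indices."""
    base, extra = divmod(n_particles, n_clients)
    shares, start = [], 0
    for c in range(n_clients):
        count = base + (1 if c < extra else 0)
        shares.append(list(range(start, start + count)))
        start += count
    return shares


def client_accelerations(positions, masses, my_indices, g=1.0, soft=1e-3):
    """One client's job: accelerations for its own particles only,
    computed from every particle's position and mass."""
    result = {}
    for i in my_indices:
        xi, yi, zi = positions[i]
        ax = ay = az = 0.0
        for j, (xj, yj, zj) in enumerate(positions):
            if j == i:
                continue
            dx, dy, dz = xj - xi, yj - yi, zj - zi
            r2 = dx * dx + dy * dy + dz * dz + soft * soft
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            ax += g * masses[j] * dx * inv_r3
            ay += g * masses[j] * dy * inv_r3
            az += g * masses[j] * dz * inv_r3
        result[i] = (ax, ay, az)
    return result


def server_step(positions, masses, n_clients):
    """The server's job: hand out index lists, then collect the results."""
    merged = {}
    for share in split_indices(len(positions), n_clients):
        # In the MPI version this would be a send, a remote compute, and a receive.
        merged.update(client_accelerations(positions, masses, share))
    return [merged[i] for i in range(len(positions))]


positions = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0),
             (0.0, 0.0, -3.0), (1.5, 1.5, 1.5)]
masses = [1.0] * len(positions)

one_client = server_step(positions, masses, 1)
three_clients = server_step(positions, masses, 3)
print("same answer with 1 and 3 clients:", one_client == three_clients)
```

The number of clients changes who does the work, not the answer, which is why the force calculation is a natural part of the code to parallelize.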

Parallel:Remote Hosts

So let's run that again on a compute node. We add one option to the end of the command ('0'), meaning generate no graphic output, just do the calculations. Let's record the “wall time”.

- the machinefile contains 8 lines, each with the entry localhost

- we'll run the parallel code over the GigE switch

- we'll invoke OpenMPI

time /share/apps/openmpi-1.2/bin/mpirun -np 2 -machinefile /share/apps/Gal/machines-headnode /share/apps/Gal/GalaxSee 5000 400 5000 0

Some results …

Run on a single idle dual quad-core node (n2-2), GigE node via OpenMPI:

| -np | time |
|---|---|
| 02 | 9m36s |
| 04 | 4m49s |
| 08 | 2m26s |

… we observe that our program runs faster and faster with more cores. But remember that all these “workers” still run on the same host. So next, let's run via the scheduler and ask for more cores, so that multiple hosts are involved in solving the problem.
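To put numbers on "faster and faster", the wall times in the table above can be turned into speedup and parallel efficiency. The sketch below does that in Python; since the table has no single-core run, speedup is taken relative to the 2-core run, which is an assumption of this example. The helper names `to_seconds` and `relative_speedup` are hypothetical.

```python
def to_seconds(text):
    """Convert a wall time such as '9m36s' into seconds."""
    minutes, seconds = text.rstrip("s").split("m")
    return int(minutes) * 60 + int(seconds)


def relative_speedup(timings, base_cores):
    """Return (cores, seconds, speedup, efficiency) rows relative to base_cores.

    Efficiency is the speedup divided by the factor by which the core count
    grew, so 1.0 means the extra cores were used perfectly.
    """
    base = to_seconds(timings[base_cores])
    rows = []
    for cores in sorted(timings):
        t = to_seconds(timings[cores])
        speedup = base / t
        efficiency = speedup / (cores / base_cores)
        rows.append((cores, t, speedup, efficiency))
    return rows


# Wall times from the single-node table above.
single_node = {2: "9m36s", 4: "4m49s", 8: "2m26s"}
rows = relative_speedup(single_node, base_cores=2)

for cores, t, speedup, efficiency in rows:
    print(f"-np {cores}: {t:4d}s  speedup {speedup:.2f}x  efficiency {efficiency:.0%}")
```

On a single host the efficiency stays close to 100%: each doubling of cores very nearly halves the run time.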

At this point, we invoke the MPI wrapper scripts as detailed in the Parallel Job Submissions page. The scheduler now builds the machinefile on the fly, since we never know in advance where our jobs will end up. It could be on any of the nodes, each with dual quad-core processors. We use the same Gal parameters as above.

- let us try the 04-hwnodes queue, all 4 hosts are idle right now

- so the max number of cores we can ask for is 4 hosts * 2 processors * 4 cores = 32 (-np)

- -nh below refers to the number of hosts involved in solving the problem

Run on the 04-hwnodes queue, all HW nodes, OpenMPI over GigE switch:

| -np | -nh | time |
|---|---|---|
| 04 | 01 | 4m45s |
| 08 | 01 | 2m27s |
| 12 | 02 | 1m42s |
| 16 | 02 | 1m21s |
| 32 | 04 | 0m57s |

Now we observe that the improvement in run time flattens out once we ask for more than 12 cores: going from 4 to 8 cores nearly halves the time, but doubling from 16 to 32 cores only cuts it from 1m21s to 0m57s. The logic behind this is that the time required to pass messages between the workers and the server now becomes a burden. If we switch to the idle queue, we can ask for far more cores and see no further improvement at all.
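One simple way to picture the flattening is to assume the run time has a part that divides evenly across cores and a part that does not: T(p) = work / p + overhead. That model is an assumption of this sketch, not something measured above, and real message-passing cost probably grows with the number of workers rather than staying constant. With that caveat, a least-squares fit to the 04-hwnodes timings gives a rough feel for the floor the job is approaching. The function name `fit_overhead_model` is hypothetical.

```python
def fit_overhead_model(cores, seconds):
    """Least-squares fit of T(p) = work / p + overhead.

    Substituting x = 1 / p turns the model into a straight line,
    so ordinary linear regression gives slope (work) and intercept (overhead).
    """
    xs = [1.0 / p for p in cores]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_t = sum(seconds) / n
    sxy = sum((x - mean_x) * (t - mean_t) for x, t in zip(xs, seconds))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    work = sxy / sxx
    overhead = mean_t - work * mean_x
    return work, overhead


# 04-hwnodes table: 4m45s, 2m27s, 1m42s, 1m21s, 0m57s converted to seconds.
cores = [4, 8, 12, 16, 32]
seconds = [285, 147, 102, 81, 57]

work, overhead = fit_overhead_model(cores, seconds)
print(f"divisible work: about {work:.0f} core-seconds")
print(f"fixed overhead: about {overhead:.0f} seconds")
for p, t in zip(cores, seconds):
    print(f"-np {p:2d}: measured {t:3d}s, model {work / p + overhead:6.1f}s")
```

Under this model no number of cores can push the run time below the overhead term, which is the flattening seen in the table.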

Run on the idle queue, mix of nodes, OpenMPI over GigE switch:

| -np | -nh | time |
|---|---|---|
| 08 | 02 | 2m28s |
| 16 | 03 | 1m23s |
| 32 | 06 | 1m20s |
| 64 | 12 | 1m26s |
| 80 | 14 | 1m16s |
| 96 | 15 | 1m26s |

Moral of the story: the more cores you request, the higher the probability that your job will remain in a pending state until enough cores are available for processing. To get the job done, it may be better to aim for an acceptable turnaround time than for the largest possible number of cores.

⇒ go to page 2/3 of what is parallel computing ?