Linpack

Grabbed the Linpack (HPL) source and compiled it against /opt/openmpi/1.4.2 … using the Make.Linux_PII_CBLAS makefile. We had to grab the ATLAS libraries from another host. In the makefile we changed $HOME, pointed $MPdir and $MPlib at libmpi.so, and repointed $LAdir. After that it compiled fine.

More about Linpack on Wikipedia.

HP

Based on what we did during the Dell burn-in (see the previous Linpack Runs page), here are some calculations:

- N calculation: 32 nodes at 12 GB each is 384 GB total, which yields 48 G double-precision (8-byte) elements … 48 G is 48*1024*1024*1024 = 51,539,607,552 … the square root of that, rounded, is 227,023 … 80% of that is about 181,600

- NB: start with 64, then 128, try 192 …

- PxQ: 16×16=256, a perfect square equal to the number of cores we have (32 nodes × 8 cores).
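The N arithmetic above can be scripted so it is easy to redo for other clusters. This is a minimal sketch, assuming "GB" means 1024^3 bytes and that awk is available; `hpl_n` is a hypothetical helper name, not part of HPL.

```bash
#!/bin/bash
# Hypothetical helper reproducing the N rule of thumb used in these notes:
# total memory / 8 bytes = number of double-precision elements,
# N = square root of that, then keep 80% of N.
# Usage: hpl_n <nodes> <GB per node>   (GB taken as 1024^3 bytes)
hpl_n() {
  local nodes=$1 gb_per_node=$2
  awk -v nodes="$nodes" -v gb="$gb_per_node" 'BEGIN {
    total_bytes = nodes * gb * 1024 * 1024 * 1024
    elements = total_bytes / 8          # 8 bytes per double
    n = sqrt(elements)
    printf "%d %d\n", n, 0.8 * n        # full N, then 80% of N (both truncated)
  }'
}
```

For this cluster, `hpl_n 32 12` prints `227023 181618`. The same helper gives `131072 104857` for 16 nodes with 8 GB and `384936 307949` for 46 nodes with 24 GB, which match the Dell InfiniBand and BSS estimates further down up to rounding.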

Next, create the machines file, which lists the hostname once for each core.

```bash
# one line per core: 32 nodes x 8 cores each
for i in $(seq 1 32)
do
  for j in $(seq 1 8)
  do
    echo n${i}-ib0 >> machines
  done
done
```

Note that we're running over the hostname-ib0 interfaces, i.e. the InfiniBand ports. It probably does not matter, but this way we stay off the provisioning switch and should see the Voltaire switch light up.
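Before launching, it is worth checking that the hostfile has the expected shape (one entry per core, every node present). A minimal sketch; `check_machines` is a hypothetical helper and only uses standard `wc` and `sort`.

```bash
#!/bin/bash
# Hypothetical sanity check for an MPI hostfile with one line per core:
# prints the total number of slots and the number of distinct hosts.
# Usage: check_machines <file>
check_machines() {
  local file=$1
  local slots hosts
  slots=$(wc -l < "$file")           # one line per MPI slot
  hosts=$(sort -u "$file" | wc -l)   # distinct hostnames
  # $(( )) strips any padding some wc implementations add
  echo "$((slots)) slots on $((hosts)) hosts"
}
```

For the file generated by the loop above, `check_machines machines` should report `256 slots on 32 hosts`; `sort machines | uniq -c` shows the per-host counts if the totals look wrong.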

Simple script for invocation.

```bash
#!/bin/bash
export PATH=/opt/openmpi/1.4.2/bin:$PATH
export LD_LIBRARY_PATH=/opt/openmpi/1.4.2/lib:/home/hptest/test/lib64/atlas_GenuineIntel_x86_64
mpirun -n 256 --hostfile machines ./xhpl > hpl.log 2>&1 &
```
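When several N/NB/PxQ combinations are run, the summary lines in hpl.log (they start with `WR`) can be scanned for the fastest one. A sketch, assuming the standard HPL summary layout `T/V N NB P Q Time Gflops`, so Gflops is the seventh field; `best_hpl_run` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: print the HPL summary line with the highest Gflops.
# Summary lines start with "WR"; field 7 is Gflops (e.g. 1.511e+03),
# which awk converts to a number with "+ 0".
# Usage: best_hpl_run <logfile>
best_hpl_run() {
  awk '/^WR/ && (best == "" || $7 + 0 > max) { max = $7 + 0; best = $0 }
       END { if (best != "") print best }' "$1"
}
```

Running `best_hpl_run hpl.log` after the job finishes gives the line to record under Results.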

Results

The best result we found (about 1.5 teraflops) was with:

- N = 181,600

- NB = 128

- PxQ = 16 x 16

```
T/V                N    NB     P     Q               Time                 Gflops
----------------------------------------------------------------------------
WR00R2C4      181600   128    16    16            2642.49              1.511e+03
----------------------------------------------------------------------------
||Ax-b||_oo / ( eps * ||A||_1  * N        ) =        0.0241238 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_1  * ||x||_1  ) =        0.0097160 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_oo * ||x||_oo ) =        0.0016592 ...... PASSED
============================================================================
T/V                N    NB     P     Q               Time                 Gflops
----------------------------------------------------------------------------
WR00R2R2      181600   128    16    16            2649.93              1.507e+03
----------------------------------------------------------------------------
||Ax-b||_oo / ( eps * ||A||_1  * N        ) =        0.0246131 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_1  * ||x||_1  ) =        0.0099131 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_oo * ||x||_oo ) =        0.0016929 ...... PASSED
============================================================================
T/V                N    NB     P     Q               Time                 Gflops
----------------------------------------------------------------------------
WR00R2R4      181600   128    16    16            2644.63              1.510e+03
----------------------------------------------------------------------------
||Ax-b||_oo / ( eps * ||A||_1  * N        ) =        0.0231181 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_1  * ||x||_1  ) =        0.0093110 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_oo * ||x||_oo ) =        0.0015901 ...... PASSED
============================================================================
```
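As a sanity check on the Gflops column, the figure can be recomputed from N and the wall time. This sketch assumes HPL's usual operation count of 2/3*N^3 + 3/2*N^2 floating-point operations; `hpl_gflops` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: recompute HPL's Gflops from N and wall time (seconds),
# assuming the operation count 2/3*N^3 + 3/2*N^2.
# Usage: hpl_gflops <N> <seconds>
hpl_gflops() {
  awk -v n="$1" -v t="$2" 'BEGIN {
    flops = (2.0 / 3.0) * n * n * n + 1.5 * n * n
    printf "%.3e\n", flops / t / 1e9   # same %.3e style as the HPL log
  }'
}
```

`hpl_gflops 181600 2642.49` prints `1.511e+03`, matching the first run above; a mismatch would point at a copy error in N or the time.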

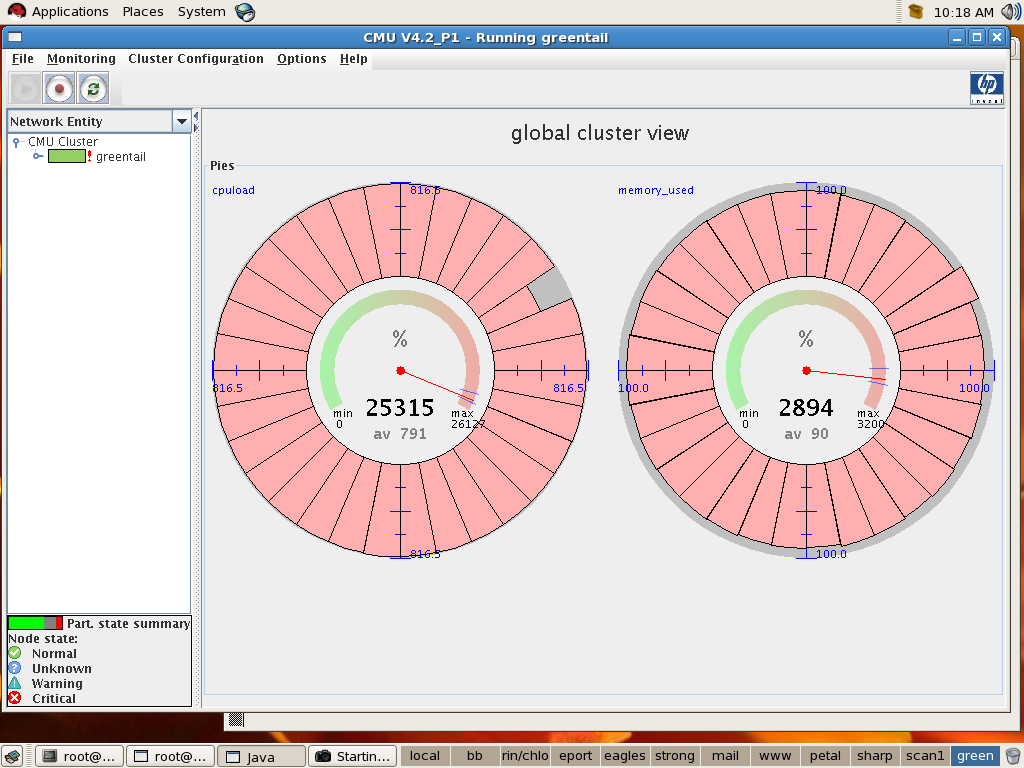


Hmm. That revealed a host with 10 GB of memory instead of 12 GB.

```
[root@greentail Linux_PII_CBLAS]# pdsh grep MemTotal /proc/meminfo
n10: MemTotal: 12290464 kB
n26: MemTotal: 12290464 kB
n13: MemTotal: 12290464 kB
n3: MemTotal: 12290464 kB
n2: MemTotal: 12290464 kB
n9: MemTotal: 12290464 kB
n23: MemTotal: 12290464 kB
n30: MemTotal: 12290464 kB
n28: MemTotal: 12290464 kB
n1: MemTotal: 12290464 kB
n31: MemTotal: 12290464 kB
n20: MemTotal: 12290464 kB
n27: MemTotal: 12290464 kB
n25: MemTotal: 12290464 kB
n15: MemTotal: 12290464 kB
n16: MemTotal: 12290464 kB
n18: MemTotal: 12290464 kB
n29: MemTotal: 12290464 kB
n6: MemTotal: 12290464 kB
n7: MemTotal: 12290464 kB
n5: MemTotal: 12290464 kB
n24: MemTotal: 12290464 kB
n32: MemTotal: 12290464 kB
n19: MemTotal: 12290464 kB
n12: MemTotal: 12290464 kB
n22: MemTotal: 12290464 kB
n8: MemTotal: 12290464 kB
n11: MemTotal: 12290464 kB
n4: MemTotal: 12290464 kB
n14: MemTotal: 12290464 kB
n17: MemTotal: 12290464 kB
n21: MemTotal: 10221992 kB   <--- hmm
```
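Rather than eyeballing the pdsh output, the short nodes can be filtered out automatically. A sketch that assumes the `host: MemTotal: <kB> kB` line format shown above and uses the common value 12290464 kB as the threshold; `low_mem_hosts` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical filter: read "host: MemTotal: <kB> kB" lines on stdin and
# print hosts whose MemTotal is below the given threshold (in kB).
# Usage: pdsh grep MemTotal /proc/meminfo | low_mem_hosts 12290464
low_mem_hosts() {
  local min_kb=$1
  awk -v min="$min_kb" '$2 == "MemTotal:" && $3 + 0 < min + 0 {
    host = $1
    sub(/:$/, "", host)     # strip the trailing colon pdsh adds
    print host, $3 " kB"
  }'
}
```

On the output above it reports only `n21 10221992 kB`.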

Dell

Since the cluster will be shut down on December 28th, we have an opportunity to run Linpack on the Dell cluster.

- ETHERNET

- N calculation: 20 nodes with a 4/8/16 GB mix, 192 GB total, which yields 24 G double-precision (8-byte) elements … 24 G is 24*1024*1024*1024 = 25,769,803,776 … the square root of that, rounded, is 160,529 … 80% of that is 128,423

- NB: start with 64, then 128, try 192 …

- PxQ: 10×16=160 (not a perfect square), the number of cores we have
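When the core count is not a perfect square, a grid can be picked by taking the factor pair of the core count that is closest to square, with P <= Q (the usual HPL advice is a roughly square grid with P no larger than Q). A sketch in plain bash; `hpl_grid` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: choose P x Q with P * Q == cores and P <= Q,
# taking the largest divisor P with P * P <= cores (closest to square).
# Usage: hpl_grid <cores>
hpl_grid() {
  local cores=$1 p best=1
  for (( p = 1; p * p <= cores; p++ )); do
    if (( cores % p == 0 )); then
      best=$p
    fi
  done
  echo "$best x $(( cores / best ))"
}
```

`hpl_grid 160` gives `10 x 16` and `hpl_grid 256` gives `16 x 16`; for 92 cores the best exact fit is `4 x 23`, while 90 cores gives `9 x 10`.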

```
============================================================================
T/V                N    NB     P     Q               Time                 Gflops
----------------------------------------------------------------------------
WR00L2L2       40800   128    10    16             184.42              2.455e+02
----------------------------------------------------------------------------
||Ax-b||_oo / ( eps * ||A||_1  * N        ) =        0.0069974 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_1  * ||x||_1  ) =        0.0105682 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_oo * ||x||_oo ) =        0.0020883 ...... PASSED
============================================================================
```

- INFINIBAND

- N calculation: 16 nodes with 8 GB per node, 128 GB total, which yields 16 G double-precision (8-byte) elements … 16 G is 16*1024*1024*1024 = 17,179,869,184 … the square root of that is exactly 131,072 … 80% of that is 104,857.6, which we rounded to 104,850

- NB: start with 64, then 128, try 192 …

- PxQ: 11×11=121, the perfect-square grid used in the run below
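A common HPL tuning practice (an assumption here, not something we measured on these runs) is to round N down to a multiple of NB so the matrix splits evenly into NB x NB blocks. A one-line sketch; `round_to_nb` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: round N down to a multiple of the block size NB
# using bash integer division.
# Usage: round_to_nb <N> <NB>
round_to_nb() {
  echo $(( $1 / $2 * $2 ))
}
```

For example, `round_to_nb 104850 64` gives `104832`, and `round_to_nb 181600 128` gives `181504`.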

```
============================================================================
T/V                N    NB     P     Q               Time                 Gflops
----------------------------------------------------------------------------
WR00L2L2       52425    64    11    11             294.28              3.264e+02
----------------------------------------------------------------------------
||Ax-b||_oo / ( eps * ||A||_1  * N        ) =        0.0059082 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_1  * ||x||_1  ) =        0.0133907 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_oo * ||x||_oo ) =        0.0024480 ...... PASSED
============================================================================
```

So the total is 2.455e+02 + 3.264e+02 Gflops, or about 572 Gflops (roughly 0.57 teraflops).

BSS

Since the cluster will be shut down on December 28th, we have an opportunity to run Linpack on the sharptail cluster.

- N calculation: 46 nodes with 24 GB per node, 1,104 GB total, which yields 138 G double-precision (8-byte) elements … 138 G is 138*1024*1024*1024 = 148,176,371,712 … the square root of that, rounded, is 384,936 … 80% of that is 307,950

- NB: start with 64, then 128, try 192 …

- PxQ: 9×10=90 (not a perfect square), close to the number of cores we have (92).

Hmm, we were unable to make this run across all the nodes at the same time, and we're not sure why. My estimate is that with 92 cores and 1,126 GB of memory this cluster should be able to do 500-700 Gflops.