The “information dive” into enterprise storage was an educational one. This write up is more for my note taking so I can keep track of and recall things.

The Storage Problem

In a commodity HPC setup deploying plain NFS, bottlenecks can develop. Then the compute nodes hang and a cold reboot of the entire HPCC is needed. NFS clients on a compute node contact NFS daemons on our file server sharptail and ask for, say, a file. The NFS daemon assigned the task then locates the content via metadata (location, inodes, access, etc.) on the local disk array. The NFS daemon collects the content and hands it off to the NFS client on the compute node. So the data passes through the entire NFS layer.

A Solution

“Parallelize” the access by separating file system content from file system metadata (lookup operations). To use Panasas terms, Director blades service the metadata lookups of a file system (where what is, access permissions, etc.) and Storage blades contain the actual data. Now when an NFS client contacts a Director, once the Director figures out where what is and that it's ok to proceed, the NFS client gets connected directly to the appropriate Storage blade for content retrieval. That's the big picture.
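To make the split concrete, here is a toy sketch in Python of the two-step read path described above. It is not the Panasas API: the class names `Director` and `StorageBlade`, the function `client_read`, and all paths, users and object ids are made up for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class StorageBlade:
    """Holds file content only; knows nothing about paths or permissions."""
    name: str
    objects: dict = field(default_factory=dict)

    def read(self, object_id: str) -> bytes:
        return self.objects[object_id]


@dataclass
class Director:
    """Holds metadata only: path -> (blade name, object id, allowed users)."""
    metadata: dict = field(default_factory=dict)

    def lookup(self, path: str, user: str) -> tuple:
        blade_name, object_id, allowed = self.metadata[path]
        if user not in allowed:
            raise PermissionError(f"{user} may not read {path}")
        return blade_name, object_id


def client_read(director, blades, path, user):
    # Step 1: a small metadata request goes to the Director.
    blade_name, object_id = director.lookup(path, user)
    # Step 2: the bulk data comes straight from the Storage blade,
    # without passing through the Director.
    return blades[blade_name].read(object_id)


# Hypothetical contents, for illustration only.
blades = {
    "sb1": StorageBlade("sb1", {"obj-1": b"results of run 42"}),
    "sb2": StorageBlade("sb2", {"obj-2": b"input deck"}),
}
director = Director({
    "/home/alice/run42.out": ("sb1", "obj-1", {"alice"}),
    "/home/bob/input.dat": ("sb2", "obj-2", {"bob", "alice"}),
})

print(client_read(director, blades, "/home/alice/run42.out", "alice"))
```

The point of the sketch is only that the Director answers "where and may I", while the content itself never passes through it, unlike the single NFS daemon path described earlier.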

Here are three enterprise solutions I got quotes for. Two are object based storage (content gets written out multiple times across multiple blades), one is not (Netapp). The last solution is to continue as is but expand.

http://www.panasas.com/

Got a quote for an ActiveStor 16 single shelf 66T storage containing 12 blades (fully populated). There were 3 Director + 8 Storage blades for redundancy. That yields about 45T usable storage space. It is object based so each information block gets written out multiple times. Each blade contains an SSD flash area for quick read/writes, back ended with slower disks.

To leverage our Infiniband framework we need to include an FDR IB router. In order to connect our 1G Ethernet framework we need a 10G Ethernet switch. 1 year warranty, promotional offer. Without the needed switches the quote closed in on Netapp nicely.

I was told deduplication is included but I see no proof of that on their web site. The file system is proprietary (PanFS). No snapshots, but not really needed with object based file system. We can do that with another tool, rsnapshot.org and store snaps on another box.

First, the Infiniband side. Think of different levels of connectivity speeds: FDR=14x, QDR=4x, DDR=2x and SDR=1x … the speed of 1G Ethernet. (Absurdly roughly, Infiniband is a much smarter topology manager; it continually figures out optimal pathways, versus Ethernet pathways which are static, so if a pathway is choked, you wait.) So on the back of the Panasas shelf a premier FDR connection is added by the IB router (internally the shelf is 10G Ethernet). But then we immediately drop performance connecting to our QDR switch, which is then connected to the DDR switch. That does not seem like an efficient use of capabilities and is necessitated because we serve /home up using an NFS mount over Infiniband using IPoIB (see below).

Second, the Ethernet side. On the back of the Panasas shelf are 2x10G Ethernet ports which cannot step down to 1G Ethernet. So one could bond them (essentially creating a 20G pipe) to a 10G Ethernet switch (24 ports). There, ports can be stepped down to 1G to hook up our non-Infiniband enabled nodes (queue tinymem, petaltail, swallowtail). That leaves a lot of unused 10G ports (for future 10G nodes?).
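The "immediately drop performance" point can be sketched with a few lines of Python: a chain of links runs at the speed of its slowest hop. The multipliers are the same absurdly rough ones used in the paragraph above; the function and variable names are just illustrative.

```python
# Rough relative link speeds as used in the text (SDR = 1x).
RELATIVE_SPEED = {"FDR": 14, "QDR": 4, "DDR": 2, "SDR": 1}


def effective_speed(path):
    """A chain of links is only as fast as its slowest hop."""
    return min(RELATIVE_SPEED[link] for link in path)


# Shelf (FDR router) -> QDR switch -> DDR switch, as described above.
shelf_to_qdr_node = ["FDR", "QDR"]
shelf_to_ddr_node = ["FDR", "QDR", "DDR"]

for label, path in [("QDR-attached node", shelf_to_qdr_node),
                    ("DDR-attached node", shelf_to_ddr_node)]:
    print(f"{label}: {' -> '.join(path)} runs at {effective_speed(path)}x")
```

Under these rough numbers no node would ever see the FDR rate; the QDR and DDR switches set the ceiling.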

IPoIB

So that raises the IPoIB question. We're essentially a dual 1G Ethernet network (192.168.x.x for scheduler/provisioning and 10.10.x.x for data, being the NFS mounts of /home and /sanscratch). We leverage our Infiniband network by being able to do NFS mounts using IPoIB, but maybe we should dedicate our Infiniband network to the MPI protocol. We do not need /home to be fast, just 99.999% available. Content should be staged in scratch areas for fast access.

If we do dedicate Infiniband to MPI, all compute nodes need two 1G Ethernet connections to spread the traffic mentioned above. Currently the only ones that do not have two (but are capable) are the nodes in queue hp12.

http://www.purestorage.com/

This group came knocking at my door stating I really needed their product. Before I agreed to meet them I asked for a budget quote (which I have not received yet) anticipating a monetary value in our budget stratosphere.

This solution is a hybrid model of Panasas. An all Flash Blade shelf with internally 40G Ethernet connectivity. Holds up to 15 blades with a minimal population of 7. In Panasas terms, each blade is both a Director and a Storage blade. All blades are 100% flash, meaning no slow disks. Blades hold 8T SSDs. So 56T raw; their web site includes deduplication performance estimates (!), so make your own guess as to what's usable.

There are 8x 40G Ethernet ports on the back. I have no idea if they can step down on the shelf itself or if another 10G Ethernet switch is needed. I also do not know if that number decreases in a half populated shelf. Protocols: NFS (which versions?) and S3 (interesting).

Until I see a quote, I'm parking this solution. Also means no IPoIB anymore for NFS.

http://www.netapp.com

So if we're dedicating Infiniband infrastructure to MPI, let's look at Netapp, which has no Infiniband solutions in their FAS offerings. (Wesleyan deploys mostly Netapp storage boxes so there is expertise in-house to leverage.)

Netapp quoted me a FAS2554 which is a hybrid. A 24 disk shelf with either 20x2T (23T usable) or 20x4T (51T usable) slower disks. (There are larger options but they break my budget). It also holds 4x 800G SSDs to form a flashpool of about 1.4T usable, which is much larger than I expected, for speedy read and writes. Deduplication, snapshotting, CIFS, NFS (v3, v4 and pnfs), FCP, and iSCSI all included. 1 year warranty. All in an affordable price range if we commit to it. We gain

- 1.4T flashpool for fast read/writes

- robust Data Ontap OS with Raid 6 with dual parity (99.999% reliable)

- dual controller, hot swap, high availability, fail over

- parallel nfs (pnfs) native to the OS

- we could increase /home until local snapshots get in the way (daily, weekly, monthly)

- we could dedicate our current file server to be a remote snapshot storage device

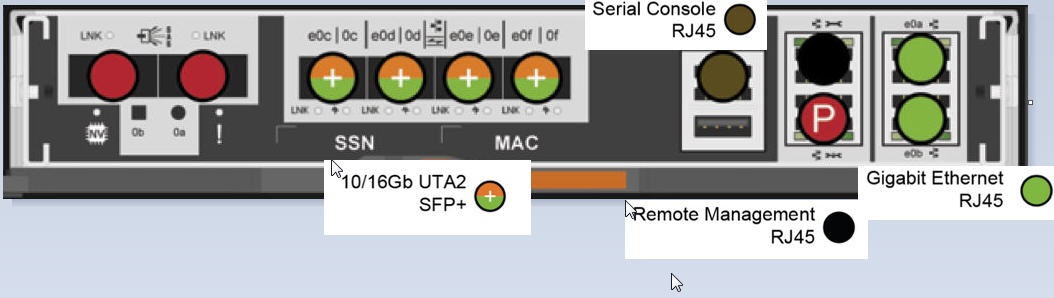

All very exciting features. So let's look at the connectivity. That needs a picture; here is the back of one controller, blown up (from blog.iops.ca).

Left red SAS ports for shelf connects, 2 of 4 UTA SFP+ ports for Cluster Interconnect communication, and the red RJ45 P port for Alternate Control Path, all needed by Data Ontap.

That leaves 2 of 4 SFP+ ports that we can step down from 40G/10G Ethernet via two cables X6558-R6 connecting SFP+ to SFP+ compatible ports. Meaning, hopefully we can go from FAS2554 to our Netgear GS724TS or GS752TS 1G Ethernet SFP Link/ACT ports. That would hook up 192.168.x.x and 10.10.x.x to the FAS2554. We need, and have, 4 of these ports on each switch available; once the connection is made /home can be pNFS mounted across.

Suggestion: The X6558-R6 comes up as a SAS cable. Ask if the Cisco Twin Ax cables would work with the Netgear? I suggest ordering the optics on both the NetApp and Netgear. (Note to self: I do not understand this).

Then ports e0a/e0b, green RJ45 ports to the right, connect to our core switches (public and private) to move content from and to the FAS2554 (to the research labs for example). Then we do it again for the second controller.

So we'd have, let's call the whole thing “hpcfiler”, for the first controller

- 192.168.102.200/255.255.0.0 on e0e for hpcfiler01-eth0 (or e0c, hpc private for Openlava/Warewulf)

- 10.10.102.200/255.255.0.0 on e0f for hpcfiler01-eth1 (or e0d, hpc private for /home and /sanscratch)

- 129.133.22.200/255.255.255.128 on remote management for hpcfiler01-eth2

- 129.133.52.200/255.255.252.0 on e0a for hpcfiler01-eth3.wesleyan.edu (wesleyan public)

- 10.10.52.200/255.255.0.0 on e0b for hpcfiler01-eth4.wesleyan.local (this is a different 10.10 subnet, wesleyan private)
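As a sanity check on the address plan above, here is a small sketch using Python's standard `ipaddress` module to compute the network each interface lands in and to flag numeric overlaps. The addresses, netmasks and names are copied from the list; the `PLAN` structure itself is just an illustrative layout.

```python
import ipaddress

# (hostname, port, address, netmask) as listed above for the first controller.
PLAN = [
    ("hpcfiler01-eth0", "e0e", "192.168.102.200", "255.255.0.0"),
    ("hpcfiler01-eth1", "e0f", "10.10.102.200", "255.255.0.0"),
    ("hpcfiler01-eth2", "remote management", "129.133.22.200", "255.255.255.128"),
    ("hpcfiler01-eth3.wesleyan.edu", "e0a", "129.133.52.200", "255.255.252.0"),
    ("hpcfiler01-eth4.wesleyan.local", "e0b", "10.10.52.200", "255.255.0.0"),
]

interfaces = {
    name: ipaddress.ip_interface(f"{addr}/{mask}")
    for name, _port, addr, mask in PLAN
}

for name, iface in interfaces.items():
    print(f"{name:32} {iface.with_prefixlen:20} network {iface.network}")

# Flag any pair of interfaces whose networks overlap numerically.
names = list(interfaces)
overlaps = [
    (a, b)
    for i, a in enumerate(names)
    for b in names[i + 1:]
    if interfaces[a].network.overlaps(interfaces[b].network)
]
print("numerically overlapping:", overlaps)
```

The only pair flagged is eth1 and eth4: both compute to 10.10.0.0/16. That matches the remark in the list that these are two different networks sharing the same number range, so keeping them apart has to come from the physical or switch-level separation, not from the addressing.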

Then configure second controller. My hope is that if the config is correct, each controller can now “see” each path.

Q: Can we bond hpcfiler01-eth3.wesleyan.edu and hpcfiler02-eth3.wesleyan.edu together to their core switches? (same question for eth4's wesleyan.local)

A: No, you cannot bond across controllers. But with Clustered ONTAP, every interface is set up like Active/Passive, because if the hosting port for the LIF fails, it will fail over to the appropriate port on the other controller based on the failover-group.

Awaiting word from engineers if I got all this right.

Supermicro

Then the final solution is to continue doing what we're doing by adding Supermicro integrated storage servers. The idea is to separate snapshotting, sanscratch and home read/write traffic. We've already set in motion a plan to have greentail's HP MSA 48T disk array serve the /sanscratch file system. This includes the BLCR checkpointing activity, which heavily writes to /sanscratch/checkpoints/

A 2U Supermicro with a single E5-1620v4 3.5 GHz chip (32G memory) with 2x80G disks (raid 1) can support 12x4T (raid 6) disks making roughly 40T usable. We'd connect this over Infiniband to file server sharptail and make it a snapshot host, taking over that function of sharptail. We could then merge /home and /snapshots for a larger /home (25T) on sharptail.
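The "roughly 40T usable" figure follows from RAID 6 giving up two disks' worth of capacity to parity. A minimal sketch of that arithmetic in Python, assuming a single RAID 6 group and ignoring file system overhead and the TB versus TiB difference (the function name is made up):

```python
def raid6_usable_tb(disks: int, disk_tb: float, hot_spares: int = 0) -> float:
    """Usable capacity of one RAID 6 group: two disks' worth goes to parity."""
    active = disks - hot_spares
    if active < 4:
        raise ValueError("RAID 6 needs at least 4 active disks")
    return (active - 2) * disk_tb


# 12 x 4T disks in one RAID 6 group, no hot spares.
print(raid6_usable_tb(12, 4))
```

Reserving hot spares lowers the number further, which is what the `hot_spares` parameter is for.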

It's worth noting that 5 of these integrated storage servers fit the price tag of a single Netapp FAS2554 (the 51T version). So, you could buy 5 and split out /home into home1 through home5. That is 200T, so everybody can get as much disk space as needed. Distribute your heavy users across the 5. Mount everything up via IPoIB and round robin the snapshots, as in, server home2 snapshots home1, etc.
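The round robin snapshot idea can be sketched as a simple ring: each server is snapshotted by the next one, and the last wraps around to the first. This is only an illustration of the pairing, with a made-up function name; the actual snapshotting would still be done by a tool such as rsnapshot.

```python
def round_robin_snapshots(servers):
    """Map each server to the next one in the ring, which holds its snapshots."""
    n = len(servers)
    return {servers[i]: servers[(i + 1) % n] for i in range(n)}


homes = [f"home{i}" for i in range(1, 6)]  # home1 .. home5
for source, snapshot_host in round_robin_snapshots(homes).items():
    print(f"{snapshot_host} snapshots {source}")
```

With this pairing no server holds its own snapshots, so losing any single box never loses both a home area and its backup.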

Elegant, simple, and you can start smaller and scale up. We have room for 2 on the QDR Mellanox switch (and 2 up to 5 on the DDR Voltaire switch). Buying another QDR Mellanox adds $7K for an 18 port switch. IPoIB would be desired if we stay with Supermicro.

What's even more desirable is to start our own parallel file system with BeeGFS.

Short term plan

- Grab the 32x2T flexstorage hard drives and insert into cottontail's empty disk array

- Makes for a 60T raw raid 6 storage place (2 hot spares)

- move the sharptail /snapshots to it (remove that traffic from file server)

- Dedicate greentail's disk array to /sanscratch

- Remove /home_backup 10T

- Extend /sanscratch from 27T to 37T

- Dedicate sharptail's disk array to /home

- Keep old 5T /sanscratch as backup, idle

- Remove 15T /snapshots

- Extend /home from 10T to 25T

- Keep 7T /archives until those users graduate, move to Rstore

Long term plan

- Start a BeeGFS storage cluster

- cottontail as MS (management server)

- sharptail as AdMon (monitor server) and proof of concept storage OSS

- pilot storage on idle /sanscratch/beegfs/

- also a folder on cottontail:/snapshots/beegfs/

- n38-n45 (8) as MDS (metadata servers, 15K local disk, no raid)

- Buy 2x 2U Supermicro for OSS (object storage servers for a total of 80T usable, raid 6, $12.5K)

- Serve up BeeGFS file system using IPoIB

- Move /home to it

- Backup to older disk arrays

- Expand as necessary