
Job Slots

I was asked in the UUG meeting yesterday how one determines how many job slots are still available. It turns out to be a tricky question. In CluMon you might observe a host with only one JOBPID running that the scheduler nevertheless declares 'Full'. This would be a parallel job claiming all job slots with the “bsub -n N …” parameter. Other hosts may list anywhere from 1 to 8 JOBPIDs.

There may be a mix of serial and parallel jobs. So how do you find out how many job slots are still available?

Refresher 1

Current members of our “core” queues. Our “core” queues are identified as the light weight nodes on the Infiniband switch, the light weight nodes on the GigE Ethernet switch, and the heavy weight nodes (also on the GigE Ethernet switch).

| Queue / Hosts | Description |
|---|---|
| Queue Name: 16-ilwnodes | light weight nodes, Infiniband + GigE switches |
| Host Names: compute-1-1 … compute-1-16 | all in rack #1 |
| Queue Name: 16-lwnodes | light weight nodes, GigE switch only |
| Host Names: compute-1-17 … compute-1-27 | all in rack #1 |
| Host Names: compute-2-28 … compute-2-32 | all in rack #2 |
| Queue Name: 04-hwnodes | heavy weight nodes, GigE switch only |
| Host Names: nfs-2-1 … nfs-2-4 | all in rack #2 |

Refresher 2

Our nodes contain dual quad-core processors. So each node has 2 physical processors, and each processor has 4 cores. Each node then has 8 cores. These cores share access to either 4 GB (light weight) or 16 GB (heavy weight) of memory. Although the terminology differs between applications, think of a core as a job slot. The scheduler will schedule up to 8 jobs per node, then consider that node 'Full'. It is assumed that each job (read: task) uses the core assigned to it by the operating system to its full potential.

So whether it is Gaussian's parameter Nprocs, the BSUB parameter -n (processors), or the mpirun parameter -np (number of processors), in all these cases they refer to cores, which implies job slots. This is a result of chipsets developing faster than the software. Basically, in a configuration file for the scheduler we can assign the number of job slots per host, or per type of host.

Side Bar: I'm temporarily overloading the queue 16-lwnodes by defining 12 job slots to be available per host. The reason for this is that I'm observing jobs with very small memory requirements, particularly serial jobs. So a host may have 8 jobs running but still have 3 GB of memory free. As long as the host is swapping memory only lightly, we could increase the job throughput a bit. I was told that some sites go as far as overloading the slots-per-node ratio 4:1, but I was advised not to push it beyond 2:1 unless our jobs are very homogeneous. Which they are not.
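To make the slot arithmetic above concrete, here is a minimal sketch in Python. The function and constant names are made up for illustration; the only numbers taken from this page are 2 processors per node, 4 cores per processor, and the 12 slots per host currently defined for 16-lwnodes.

```python
from fractions import Fraction

# Hardware figures from Refresher 2; the names are illustrative only.
PROCESSORS_PER_NODE = 2
CORES_PER_PROCESSOR = 4


def cores_per_node(processors=PROCESSORS_PER_NODE, cores=CORES_PER_PROCESSOR):
    """Number of cores (the default number of job slots) on one node."""
    return processors * cores


def overload_ratio(slots_per_host, cores):
    """Configured job slots divided by physical cores, as an exact fraction."""
    return Fraction(slots_per_host, cores)


cores = cores_per_node()                # 2 * 4 = 8
ratio = overload_ratio(12, cores)       # 12 / 8 = 3/2
ceiling_at_2_to_1 = 2 * cores           # 16 slots would be the advised 2:1 limit

print(f"{cores} cores, 12 slots -> {float(ratio)}:1 (2:1 would be {ceiling_at_2_to_1} slots)")
```

So 12 slots on an 8-core host works out to 1.5:1, still under the 2:1 ceiling that was suggested to me.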

Example 1

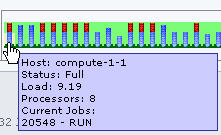

In this example, the node is flagged as 'Full' despite only showing one JOBPID. When we query the scheduler for what is running, we find out that a single job has requested 8 job slots, thereby exhausting all job slots. Note the information under EXEC_HOST.

    [root@swallowtail ~]# bjobs -m compute-1-1 -u all
    JOBID   USER    STAT  QUEUE      FROM_HOST   EXEC_HOST      JOB_NAME   SUBMIT_TIME
    20548   qgu     RUN   idle       swallowtail 8*compute-1-1  run101     Oct 17 20:06

Example 2

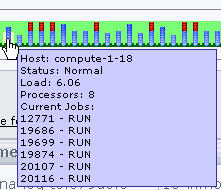

In this example, the node is flagged as 'Normal' and lists 6 JOBPIDs. When we query the scheduler for what is running, we find out that 6 serial jobs are running. Hence 2 more job slots are still available in a standard configuration (see side bar).

    [root@swallowtail ~]# bjobs -m compute-1-18 -u all
    JOBID   USER    STAT  QUEUE      FROM_HOST   EXEC_HOST    JOB_NAME                       SUBMIT_TIME
    12771   chsu    RUN   16-lwnodes swallowtail compute-1-18 dna-dimer/m32/t0.103-4         Aug 21 11:34
    19686   chsu    RUN   16-lwnodes swallowtail compute-1-18 grand-canonical/t0.170/mu0.54  Sep 30 11:26
    19699   chsu    RUN   16-lwnodes swallowtail compute-1-18 grand-canonical/t0.140/mu0.48  Sep 30 12:03
    19874   chsu    RUN   16-lwnodes swallowtail compute-1-18 dna-GC/m16/t0.0925/mu24.0      Oct  4 16:38
    20107   chsu    RUN   16-lwnodes swallowtail compute-1-18 grand-canonical/t0.110/mu0.43  Oct 12 22:01
    20116   chsu    RUN   16-lwnodes swallowtail compute-1-18 grand-canonical/t0.100/mu0.43  Oct 12 22:01

Side Bar: Since we are currently defining 12 available job slots for this host, there are still 12-6 = 6 job slots available. This is one of the experimental “overloaded” hosts I'm watching. Its processors are currently working heavily with no swap activity. Yet there is a whopping 3.7+ GB each of memory and swap available. The disk I/O rate is very low at 131 KB/sec. I'd like to see what happens with 12 identical serial jobs.

    [root@swallowtail ~]# lsload -l compute-1-18
    HOST_NAME     status  r15s  r1m  r15m   ut   pg   io  ls  it    tmp    swp    mem
    compute-1-18  ok       6.0  6.0   6.0  75%  6.8  131   0   9  7088M  3782M  3712M

Example 3

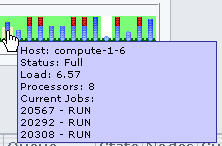

In this example, the node is flagged as 'Full' and lists 3 JOBPIDs. When we query the scheduler for what is running, we find out that a mixture of jobs is running.

    [root@swallowtail ~]# bjobs -m compute-1-6 -u all
    JOBID   USER      STAT  QUEUE  FROM_HOST    EXEC_HOST                                   JOB_NAME       SUBMIT_TIME
    20567   wpringle  RUN   idle   swallowtail  6*compute-1-13:1*compute-1-6:1*compute-1-7  run101         Oct 18 10:43
    20292   gng       RUN   idle   swallowtail  compute-1-6                                 poincare3.bat  Oct 17 11:16
    20308   qgu       RUN   idle   swallowtail  6*compute-1-6                               run101         Oct 17 13:05

There is a parallel job (20567, 1*compute-1-6) that takes up one job slot on this host. There is another parallel job (20308, 6*compute-1-6) that takes up 6 job slots on this host. Plus one serial job is running (20292). A total of 8 job slots, hence the host has no more job slots available.
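The counting done by hand above can be sketched in a few lines of Python. This is only an illustration, not an existing tool: the function name `slots_on_host` is made up, and it assumes the EXEC_HOST field is complete and has the `N*host:N*host` form shown in Example 3, with a bare host name meaning one slot.

```python
def slots_on_host(exec_host, host):
    """Count the job slots one job holds on `host`, given its EXEC_HOST field."""
    total = 0
    for part in exec_host.split(":"):
        count, star, name = part.partition("*")
        if not star:
            # Serial form: a bare host name with no "N*" prefix is one slot.
            name, count = part, "1"
        if name == host:
            total += int(count)
    return total


# EXEC_HOST values copied from the bjobs output in Example 3.
exec_hosts = {
    "20567": "6*compute-1-13:1*compute-1-6:1*compute-1-7",
    "20292": "compute-1-6",
    "20308": "6*compute-1-6",
}

used = sum(slots_on_host(field, "compute-1-6") for field in exec_hosts.values())
print(used)  # 1 + 1 + 6 = 8
```

Subtracting that total from the number of slots configured for the host gives the slots still free, which is 0 here with the standard 8 slots.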

Availability

So is there a way to get the total number of job slots available on a queue basis?

Not easily, from what I can tell. We could write a script to calculate this for us. If that would be convenient, let me know. However, that would only be useful information if no jobs were in a pending state.
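As a rough idea of what such a script could look like, here is a sketch in Python that adds up MAX minus NJOBS over the hosts of one queue, reading text in the column layout that `bhosts` prints. It is an assumption-laden illustration: the function name `free_slots` is made up, the sample rows are invented (they are not real output from our cluster), and the list of hosts belonging to a queue is simply passed in by hand.

```python
def free_slots(bhosts_text, hosts):
    """Sum MAX - NJOBS over the given hosts that are in status 'ok'."""
    rows = [line.split() for line in bhosts_text.strip().splitlines() if line.strip()]
    header, body = rows[0], rows[1:]
    col = {name: i for i, name in enumerate(header)}  # locate columns by name
    free = 0
    for row in body:
        if row[col["HOST_NAME"]] not in hosts:
            continue
        if row[col["STATUS"]] != "ok":
            continue  # closed or unavailable hosts accept no new jobs
        max_slots = row[col["MAX"]]
        if max_slots == "-":
            continue  # no slot limit shown, so nothing to count
        free += int(max_slots) - int(row[col["NJOBS"]])
    return free


# Invented sample in bhosts column layout, for illustration only.
SAMPLE = """
HOST_NAME          STATUS       JL/U    MAX  NJOBS    RUN  SSUSP  USUSP    RSV
compute-1-17       ok              -     12      6      6      0      0      0
compute-1-18       ok              -     12      6      6      0      0      0
compute-1-19       closed          -     12     12     12      0      0      0
"""

queue_hosts = {"compute-1-17", "compute-1-18", "compute-1-19"}
print(free_slots(SAMPLE, queue_hosts))
```

As noted above, the number is only meaningful when nothing is pending, since the scheduler may already have plans for those free slots.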

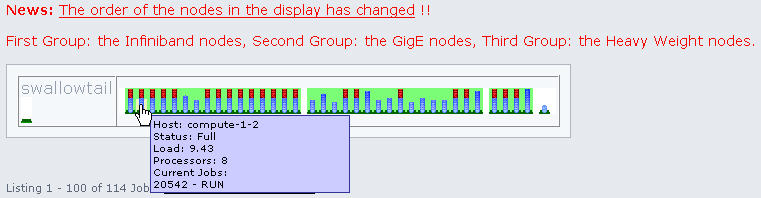

I should hack up the CluMon page some day so that the node icons line themselves up in a convenient order for us: 16-ilwnodes (1-16), 16-lwnodes (17-32), 04-hwnodes (1-4).

Fixed !!

— Meij, Henk 2007/10/19 15:06