
HPCC 36 Node Design Conference with Dell

Questions/Issues

After the conference, with the erratic behavior of our freight elevator enlightening everybody, I think we have only a few questions left to work on:

| Question/Issues | Answer |

| Shall we use the 2nd disk in the compute nodes as /localscratch? | yes |

| How large should the shared SAN scratch space /sanscratch be? | 1 TB with SAN thin provisioning |

| Shall we NFS mount directly from filer? (read about it) | no, configure the io node |

| Shall we add a second gigabit ethernet switch? (read about it) | this is a go! adding a Dell 2748 switch. also, add NIC3 for head node |

| Shall we license & install the Intel Software Tools Roll? | no, evaluate portland first, then evaluate intel, then decide |

| What should the user naming convention be (similar or dissimilar to AD)? | uid/gid from AD, set up some guest accounts in AD (this does not impact the on-site install) |

| These topics are discussed in detail on another page, click to go there. — Henk Meij 2007/01/31 11:31 |

| contact person: Carolyn Arredondo , engineer: Tony Walker |

Head Node

| FQDN | swallowtail.wesleyan.edu | 129.133.1.224 |

| interfaces |

| NIC1 | 192.168 | private network |

| NIC2 | 129.133 | wesleyan.edu |

| NIC3 | 10.3 | private network |

| HCA1 | na | infiniband |

| local disks |

| root | / | ext3 | 10 GB |

| swap | none | none | 4 GB |

| export | /state/partition1 | rest of disk |

| disks are striped & mirrored, make a /localscratch directory if needed |

| mounts | from | purpose |

| NFS | io node:/sanscratch | scratch space from SAN |

| NFS | io node:/home/users | home directories for general users |

| NFS | io node:/home/username | home directory for power user, repeat … |

| other |

| user logins permitted (ssh only, not VAS enabled) |

| what user naming convention? same as or different from AD? |

| backups: nightly incremental backup via Tivoli |

| firewall shield: allow ssh (scp/sftp), bbftp, and http/https from 129.133.0.0/16 |

| NFS traffic on Cisco switch, management/interconnect traffic on Dell switch |
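The firewall row above limits ssh, bbftp, and http/https to sources in 129.133.0.0/16. A minimal sketch of that membership test, using Python's standard `ipaddress` module, is shown here. The function name `is_allowed` and the two private sample addresses are hypothetical and only serve the illustration; the actual firewall rules are not described on this page.

```python
import ipaddress

# Campus range taken from the firewall row in the head node table.
ALLOWED_NET = ipaddress.ip_network("129.133.0.0/16")


def is_allowed(source_ip: str) -> bool:
    """Return True if source_ip falls inside the allowed campus range."""
    return ipaddress.ip_address(source_ip) in ALLOWED_NET


if __name__ == "__main__":
    # 129.133.1.224 is the head node address listed above; the other two
    # addresses are made-up examples on the private networks.
    for ip in ("129.133.1.224", "192.168.1.10", "10.3.1.10"):
        print(ip, is_allowed(ip))
```

Addresses on the two private networks fall outside the range, so under this rule they would not be accepted as sources on the public interface.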

IO Node

| interfaces |

| NIC1 | 192.168 | private network |

| NIC2 | 10.3 | private network |

| local disks |

| root | / | ext3 | 80 GB |

| swap | none | none | 4 GB |

| disks are striped & mirrored, no /localscratch needed |

| fiber | from host:volume | local mount | size | backup |

| Vol0 | filer2:/vol/cluster_scratch | /sanscratch | 1TB thin provisioned | no snapshots |

| Vol1 | filer2:/vol/cluster_home | /home | 10 TB thin provisioned | one weekly snapshot? |

| two 30 MB volumes were made to work with until NetApp disks arrive |

| exports |

| Vol0/LUN0 exported as /sanscratch |

| Vol1/LUN0 exported as /home/users … for regular users, so user jdoe's home dir is /home/users/jdoe |

| Vol1/LUN1 exported as /home/username … for power user home dir, repeat … |

| other |

| no user logins permitted, administrative users only |

| backups: not sure how many snapshots we can support on 10 TB |

| NFS traffic on Cisco switch, management/interconnect traffic on Dell switch |

Compute Nodes

| interfaces |

| NIC1 | 192.168 | private network (16+16+4) |

| NIC2 | 10.3 | private network (16+16+4) |

| HCA1 | na | infiniband (16) |

| local disks | same as head node |

| root | / | ext3 | 10 GB |

| swap | none | none | 4 GB |

| export | /state/partition1 | rest of disk |

| LWN (light weight nodes): only the first disk is used, mount the second disk (80 GB) as /localscratch |

| HWN (heavy weight nodes): 7*36 GB MD1000 15K RPM disks, RAID 0, dedicated storage to each node via SCSI, on /localscratch |

| if heavy weight nodes have a second hard disk … treat as spares |

| mounts | from | purpose |

| NFS | io node:/sanscratch | scratch space from SAN |

| NFS | io node:/home/users | users home directories |

| NFS | io node:/home/username | power user home directory, … repeat |

| NFS | head node:/export/share | application/data space |

| other |

| no user logins permitted, administrative users only |

| backups: none |

| NFS traffic on Cisco switch, management/interconnect traffic on Dell switch |

Rolls

The question is: do we install and license the Intel Software Tools Roll, or go with the Portland compilers?

| A set of ‘base’ components are always installed on a Platform OCS cluster while a Roll can be installed at any time. Available Platform Open Cluster Stack Rolls include: |

| * Available at an additional cost |

| # Free to non-commercial customers |

| YES | |

| Platform Lava Roll | Entry-level workload management provides commercial grade job execution, management and accounting. Based on Platform LSF |

| Clumon Roll | Cluster interface for viewing ‘whole’ cluster status. It is also a great cluster dashboard tool |

| Ganglia Roll | High level view of the entire cluster load and individual node load |

| Ntop Roll | Monitor traffic on ethernet interfaces. Useful for debugging network traffic problems and passively collects network traffic on interfaces |

| Cisco Infiniband™ Roll | IB drivers and MPI libraries from Cisco |

| Modules Roll | Customize your environment settings including libraries, compilers, and environment variables |

| NO | |

| Intel® Software Tools Roll* | Delivers Intel Compilers and Tools |

| PVFS2 Roll | Provides high speed access to data for parallel applications |

| IBRIX Roll | IBRIX Fusion™ parallel file system |

| MatTool Roll# | Application for basic system management. Manage disks, DNS, users and temporary files |

| Myricom Myrinet® Roll | MPI libraries and Myrinet Drivers from Myricom |

| Platform LSF HPC Roll* | Intelligently schedule parallel and serial workloads to solve large, grand challenge problems while utilizing your available computing resources at maximum capacity |

| Intel MPI Runtime Roll | Libraries for running Applications compiled with Intel MPI |

| Volcano Roll | Simple cluster portal for Platform Open Cluster Stack. Enables job submission, Linpack as a single user account |

| Extra Tools Roll | Benchmark and Debugging Tools |

| Dell™ Roll | Dell drivers and scripts to configure compute nodes for IPMI support |

Queues

We have not thought about this in depth yet, but a grab-bag collection would be:

| Queue | Nodes | CPUs | Cores | Notes |

| HWN | 04 | 08 | 032 | large memory footprint (16 GB), dedicated, fast, local scratch space |

| LWN | 16 | 32 | 128 | small memory footprint (04 GB), shared, slower, SAN mounted scratch space |

| LWNi | 16 | 32 | 128 | small memory footprint (04 GB), shared, slower, SAN mounted scratch space, infiniband |

| debug | 01 | 02 | 008 | on head node? |

| debugi | 01 | 02 | 008 | on head node? is this even useful? |
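The numbers in the queue table can be cross-checked with a few lines of arithmetic. The sketch below assumes 2 CPUs per node and 4 cores per CPU; both values are inferred from the ratios in the table and are not stated elsewhere on this page. The helper names are hypothetical.

```python
# Rows copied from the queue table: (queue, nodes, cpus, cores).
QUEUES = [
    ("HWN", 4, 8, 32),
    ("LWN", 16, 32, 128),
    ("LWNi", 16, 32, 128),
    ("debug", 1, 2, 8),
    ("debugi", 1, 2, 8),
]

CPUS_PER_NODE = 2  # assumption: inferred from CPUs / Nodes in the table
CORES_PER_CPU = 4  # assumption: inferred from Cores / CPUs in the table


def expected(nodes):
    """Return the (cpus, cores) a queue with this many nodes should have."""
    cpus = nodes * CPUS_PER_NODE
    return cpus, cpus * CORES_PER_CPU


def inconsistent(queues):
    """Return the names of queues whose CPU or core count does not add up."""
    return [name for name, nodes, cpus, cores in queues
            if expected(nodes) != (cpus, cores)]


def compute_nodes(queues):
    """Sum the nodes of the three compute queues (debug queues excluded)."""
    return sum(nodes for name, nodes, _, _ in queues
               if name in ("HWN", "LWN", "LWNi"))


if __name__ == "__main__":
    print("inconsistent rows:", inconsistent(QUEUES))
    print("compute nodes:", compute_nodes(QUEUES))
```

Under these assumptions every row is consistent, and the three compute queues add up to the 36 nodes in the page title (the 16+16+4 noted in the compute node interface table).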

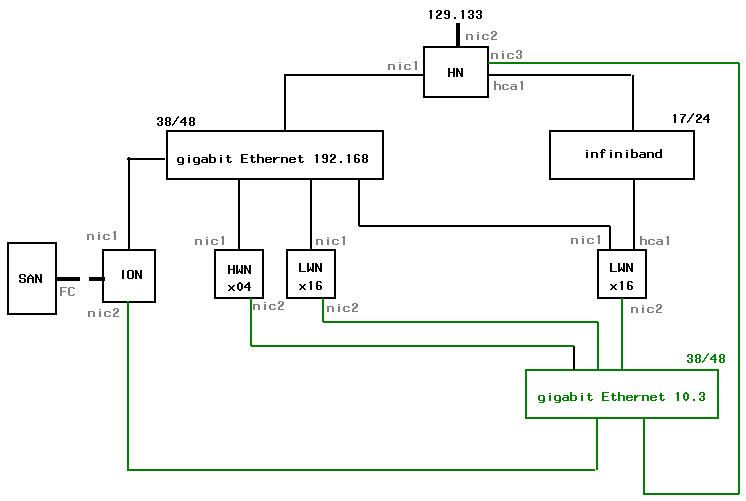

Design Diagram

Just one more switch, and an additional NIC for the head node, would do so much good! The suggestion was to route the NFS traffic through the Cisco gigabit ethernet switch and buy an additional 48 port gigabit ethernet switch, like a Dell 2748. The administrative traffic and interconnect traffic would then use this second switch.

If this is an option by the time of the configuration, some additional steps would present themselves.

activate NIC2 on io node and connect to second private network 10.3

activate NIC2 on all compute nodes and connect to 10.3

insert NIC3 into head node and connect to 10.3

make sure system /etc/hosts, or Lava's 'hosts' file, is properly set up

make sure /etc/fstab is correct mounting io node's /home and /sanscratch via 10.3

(I think that's it)
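The /etc/fstab step in the list above can be sketched as a small generator of NFS entries. This is only an illustration: the host name `ionode-nfs` is a hypothetical name for the io node's interface on the 10.3 network (it would have to resolve via /etc/hosts), the mount options are a placeholder, and mounting each export at the same local path is an assumption. The exported paths come from the mounts tables above.

```python
# Hypothetical host name for the io node's NIC2 on the 10.3 network.
IO_NODE_10_3 = "ionode-nfs"

# Exports listed in the mounts tables on this page.
EXPORTS = ["/sanscratch", "/home/users"]


def fstab_lines(server, paths, options="defaults"):
    """Build one fstab entry per exported path.

    Fields: device, mount point, fs type, options, dump, pass.
    The local mount point is assumed to equal the exported path.
    """
    return [f"{server}:{path} {path} nfs {options} 0 0" for path in paths]


if __name__ == "__main__":
    for line in fstab_lines(IO_NODE_10_3, EXPORTS):
        print(line)
```

Generating the entries from one server name makes it easy to confirm that every NFS mount points at the 10.3 interface and not at the 192.168 one.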

One alternative is to NFS mount directly from the SAN via a private network (which means a new interface is needed for the SAN). This would essentially bypass the io node and should provide better performance if the SAN is on a new private network. It would work with one or two gigabit ethernet switches. The “idle io node” could then be a node for the debug queues and/or serve as a “backup head node” if needed.

Please note the Summary Update comments regarding the connection of the head node to the infiniband switch.

— Henk Meij 2007/04/10 09:52
