
Deprecated. We only have rack running (on demand) offering access to 1.1 TB of memory. The bss24 queue on head node greentail represents the Blue Sky Studio job slots available. — Meij, Henk 2013/04/18 15:39

Update: 21 Sept 09

Cluster sharptail has undergone some changes. Courtesy of ITS, 2 more blade enclosures have been added permanently. Another 3 blade enclosures have been added temporarily (they are destined for another ITS project, so they may disappear in the future, but in the meantime I figure they can be put to good use). That means:

Cluster sharptail has 129 compute nodes, 258 job slots, and a total memory footprint of 2,328 GB.

Cluster swallowtail has 36 compute nodes, 288 job slots, and a total memory footprint of 244 GB.

On cluster sharptail, four queues have been set up. Also, Gaussian optimized for AMD Opteron chips as well as a Linda version are available.

https://dokuwiki.wesleyan.edu/doku.php?id=cluster:73#gaussian

We’ll have some documentation soon, but in the meantime there are links to resources on the web.

Queues ‘bss12’ and ‘bss24’ consist of nodes with either 12 GB or 24 GB of memory per node (2 single-core CPUs). When using Linda, the target node names must be listed explicitly (somewhat silly) instead of letting the scheduler find free nodes. So queues ‘bss12g16’ and ‘bss24g16’ have been set up with 8 predefined hosts each. These queues will not accept jobs requesting fewer than 16 job slots; the idea is that you submit Linda jobs with #BSUB -n 16 and %NProcs=16.
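A minimal sketch of how such a 16-slot job (8 hosts with 2 slots each) might be prepared, assuming a bash submit script. The file name linda_job.sh, the job name, and the output file names are hypothetical, and the Gaussian/Linda command itself is left as a placeholder because it depends on your input file.

```shell
# Write a hypothetical submit script for the 16-slot Linda queue.
# File name, job name, and output names are placeholders.
cat > linda_job.sh <<'EOF'
#!/bin/bash
#BSUB -q bss12g16
#BSUB -n 16
#BSUB -J linda_test
#BSUB -o linda_test.%J.out
#BSUB -e linda_test.%J.err

# Placeholder: run Gaussian/Linda here. The Gaussian input file
# should carry %NProcs=16 and the list of target node names.
EOF

# Submit on sharptail (commented out so the sketch runs anywhere):
# bsub < linda_job.sh
```

For the 24 GB blades, the same script would name queue bss24g16 instead.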

Cluster: sharptail

From the Blue Sky Studios hardware donations we have created a high-performance compute cluster named sharptail. The sharptail saltmarsh sparrow is a secretive and solitary bird, seldom seen and most often only heard through its splendid song, that inhabits a very narrow coastal habitat stretching from Maine to Florida.

Because of limitations in the hardware, cluster sharptail can only be reached by first establishing an SSH session with petaltail or swallowtail, and then an SSH session to sharptail. So the cluster sits entirely behind those login nodes.
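The two-hop login can be wrapped in a single command. This is only a sketch, assuming the short host name sharptail resolves from the login node; the helper name sharptail_login and its arguments are hypothetical.

```shell
# Hypothetical helper: log in to sharptail through a login node.
# Usage: sharptail_login USERNAME [LOGIN_NODE]
sharptail_login() {
    user="$1"
    gateway="${2:-petaltail.wesleyan.edu}"
    # -t allocates a terminal so the inner ssh session is interactive
    ssh -t "${user}@${gateway}" ssh sharptail
}
```

Calling `sharptail_login yourname` opens a session on petaltail and immediately starts a second SSH session to sharptail from there.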

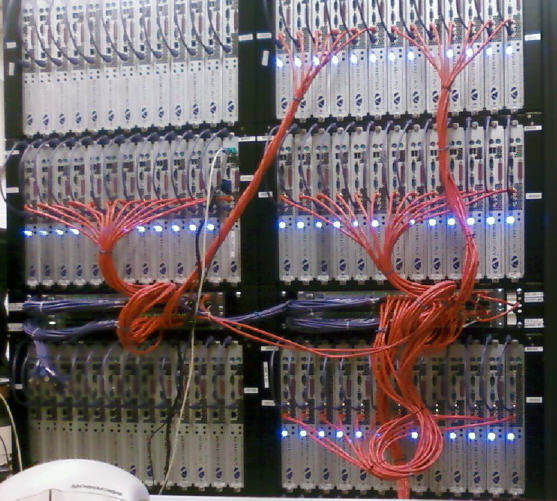

The cluster consists entirely of blades, 13 per enclosure. Currently 5 enclosures are powered. Each blade contains dual AMD Opteron model 250 CPUs (single core) running at 2.4 GHz with a memory footprint of 12 GB per blade.

Like petaltail and swallowtail, the provision network runs over 192.168.1.xxx while all NFS traffic runs over the “other” network, 10.3.1.xxx. Each blade has only a single 80 GB hard disk. After imaging, roughly 50 GB is left over, which is presented as /localscratch on each blade.

The cluster is created, maintained and managed with Project Kusu, an open source software stack derived from Platform's OCS5 software stack.

The entire cluster is on utility power with no power backup for any blade or for the installer node sharptail. Your home directory is the same as on petaltail and swallowtail and is of course being backed up.

The operating system is CentOS, version 5.3 at this time. CentOS is much like Red Hat Enterprise Linux (RHEL) but not identical. All software in /share/apps has been compiled under RHEL 5.1 and hence may not work under CentOS 5.3. We will recompile software when requested and post it in /share/apps/centos53 … all commercial software will only run on petaltail and swallowtail.

The scheduler is Lava, which is a recent, though not the latest, version of LSF. All regular commands for submitting jobs work the same way. The only difference is that advanced queue settings such as fairshare, pre-emption, and backfill are missing.

All nodes together add 128 job slots to our HPC environment, all connected through gigabit Ethernet switches. To run programs in this environment, submit them to the queue “bss12” (which stands for Blue Sky Studios, 12 GB RAM). We might have a “bss24” queue in the near future with 24 GB RAM blades. Node names follow the convention bss000-bss063.

As such, we will start to implement a soft policy that the Infiniband switch is dedicated to jobs that invoke MPI parallel programs, that is, queue “imw” on petaltail and swallowtail.

There are still some minor configurations that need to be implemented but you are invited to put cluster sharptail to work.

This page will be updated with solutions to problems found or questions asked.

All your tools will remain on petaltail.wesleyan.edu

Questions

Are the LSF and Lava schedulers working together?

No. They are two physically and logically separate clusters.

How can I determine if my program, or the program I am using, will work on sharptail?

When programs are compiled on a certain host, they are linked against system libraries and sometimes custom libraries. If any of those libraries are missing on another host, the program will not run there. To check, use 'ldd'. If no libraries are reported missing, you are good to go.

    [hmeij@sharptail ~]$ ldd /share/apps/python/2.6.1/bin/python
            libpthread.so.0 => /lib64/libpthread.so.0 (0x0000003679e00000)
            libdl.so.2 => /lib64/libdl.so.2 (0x0000003679600000)
            libutil.so.1 => /lib64/libutil.so.1 (0x0000003687000000)
            libm.so.6 => /lib64/libm.so.6 (0x0000003679a00000)
            libc.so.6 => /lib64/libc.so.6 (0x0000003679200000)
            /lib64/ld-linux-x86-64.so.2 (0x0000003678e00000)

If one or more libraries are missing, you could run this command on petaltail or swallowtail, observe where the libraries are located, and, if they exist on sharptail, add that path to LD_LIBRARY_PATH. Otherwise the programs need to be recompiled, provided they are supported on CentOS 5.3 x86_64.
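The check can be narrowed to just the unresolved libraries. The following is a minimal sketch that relies on ldd marking such entries with "not found"; the function name missing_libs and the library directory in the final comment are hypothetical.

```shell
# List shared libraries that cannot be resolved for a binary.
# Prints one library name per line; prints nothing if all are found.
missing_libs() {
    ldd "$1" 2>/dev/null | awk '/not found/ { print $1 }'
}

# Example call (on sharptail):
#   missing_libs /share/apps/python/2.6.1/bin/python
#
# If a missing library sits in a directory that also exists on sharptail,
# prepend that directory (hypothetical path) to LD_LIBRARY_PATH:
#   export LD_LIBRARY_PATH=/path/to/libdir:$LD_LIBRARY_PATH
```

An empty result means every library was resolved on the host where the function was run.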