
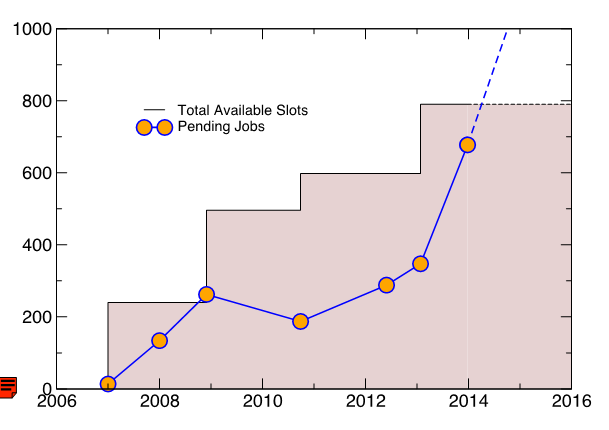

Jobs Pending Historic

Report

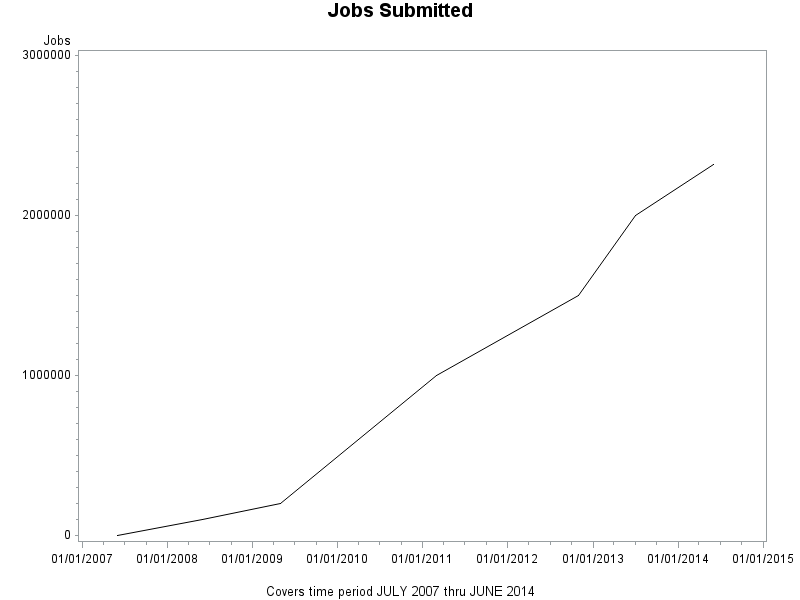

Total Jobs Submitted

Just because I keep track: the 2 millionth job milestone was reached in July 2013.

A picture of our total job slot availability and the cumulative total of jobs processed:

| Date | Total slots | Total jobs (cumulative) | CPU Teraflops |
|---|---|---|---|
| 06/01/2007 | 240 | 0 | 0.7 |
| 06/01/2008 | 240 | 100,000 | 0.7 |
| 05/01/2009 | 240 | 200,000 | 0.7 |
| 03/01/2011 | 496 | 1,000,000 | 2.2 |
| 11/01/2012 | 598 | 1,500,000 | 2.5 |
| 07/01/2013 | 598 | 2,000,000 | 2.5 |
| 06/01/2014 | 790 | 2,320,000 | 7.1 |

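Because the job counts above are cumulative, the number of jobs processed in each interval is the difference between consecutive rows. A minimal awk sketch: the function name `jobs_between` is hypothetical, and it assumes the data is fed in its original CSV form (header row first, cumulative job count TJobs in the fourth column).

```shell
#!/bin/sh
# jobs_between (hypothetical helper): read the chart CSV on stdin and print
# how many jobs were processed between consecutive dates, i.e. the difference
# of the cumulative TJobs column (field 4).
jobs_between() {
  awk -F, 'NR > 1 {
    # NR == 1 is the header; from the second data row on, print the delta.
    if (NR > 2) printf "%s -> %s: %d jobs\n", prev_date, $1, $4 - prev_jobs
    prev_date = $1
    prev_jobs = $4
  }'
}

# Usage: jobs_between < jobs.csv
#   where jobs.csv holds the "Date","Type","TSlots","TJobs","CPU Teraflops" rows.
```

For example, the interval from 05/01/2009 to 03/01/2011 works out to 800,000 jobs, the largest jump in the series.
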
Accounts

- Users

- About 200 user accounts have been created since early 2007.

- At any point in time 12-18 users may be active; activity rotates in cycles.

- There are 22 permanent collaborator accounts (faculty/researchers at other institutions).

- There are 100 generic accounts for classroom use (recycled per semester).

- Perhaps 1-2 classes per semester use the HPCC facilities.

- Depts

- ASTRO, BIOL, CHEM, ECON, MATH/CS, PHYS, PSYC, QAC, SOC

- Most active are CHEM and PHYS

Hardware

This is a brief summary of the current configuration. A more detailed version can be found in the Brief Guide to HPCC write-up.

- 52 old nodes (Blue Sky Studio donation, circa 2002) provide access to 104 job slots and 1.2 TB of memory.

- 32 new nodes (HP blades, 2010) provide access to 256 job slots and 384 GB of memory.

- 13 newer nodes (Microway/ASUS, 2013) provide access to 396 job slots and 3.3 TB of memory.

- 5 Microway/ASUS nodes provide access to 20 K20 GPUs for a total of 40,000 cores and 160 GB of memory.

- Total computational capacity is near 30.5 Teraflops (trillion floating-point operations per second).

- The GPUs account for 23.40 Teraflops of that total (double precision; single precision is in the 100+ range).

- All nodes (except the old nodes) are connected to QDR InfiniBand interconnects (switches).

- This provides a high-throughput, low-latency environment for fast traffic.

- /home is served over this environment using IPoIB for fast access.

- All nodes are also connected to standard gigabit Ethernet switches.

- The OpenLava scheduler uses this environment for job dispatching and monitoring.

- The entire environment is monitored with custom scripts and ZenOSS.

- Two 48 TB disk arrays are present in the HPCC environment, carved up roughly as follows:

- 10 TB each sharptail:/home and greentail:/oldhome (the latter for disk-to-disk backup)

- There is no off-site backup destination.

- 5 TB each sharptail:/sanscratch and greentail:/sanscratch (scratch space for nodes n33-n45, n1-n32 & b0-b51, respectively)

- 15 TB sharptail:/snapshots (for daily, weekly and monthly backups)

- 15 TB greentail:/oldsnapshots to be reallocated (currently used for virtualization tests)

- 7 TB each sharptail:/archives_backup and greentail:/archives (for static users files not in /home)
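The capacity and storage figures above can be cross-checked with simple arithmetic, using only numbers from this list. The CPU share agrees with the 7.1 CPU Teraflops in the 06/01/2014 job-slot entry, and each K20 contributes about 1.17 Teraflops in double precision. For storage, assuming each 48 TB array is the one serving the host named in its paths (sharptail or greentail), about 37 TB is allocated per array, leaving roughly 11 TB unassigned on each.

$$
\begin{aligned}
\text{CPU share} &= 30.5 - 23.40 = 7.1\ \text{Teraflops} \\
\text{per K20 GPU} &= 23.40 / 20 = 1.17\ \text{Teraflops} \\
\text{sharptail} &= 10 + 5 + 15 + 7 = 37\ \text{TB of } 48\ \text{TB} \\
\text{greentail} &= 10 + 5 + 15 + 7 = 37\ \text{TB of } 48\ \text{TB}
\end{aligned}
$$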

Software

There is an extensive list of installed software, detailed on the Software page. Some highlights:

- Commercial software

- Matlab, Mathematica, Stata, SAS, Gaussian

- Open Source Examples

- Amber, Lammps, Omssa, Gromacs, Miriad, Rosetta, R/Rparallel

- Main Compilers

- Intel (icc/ifort), gcc

- OpenMPI mpicc

- MVApich2 mpicc

- Nvidia nvcc

- GPU enabled software

- Matlab, Mathematica

- Gromacs, Lammps, Amber, NAMD

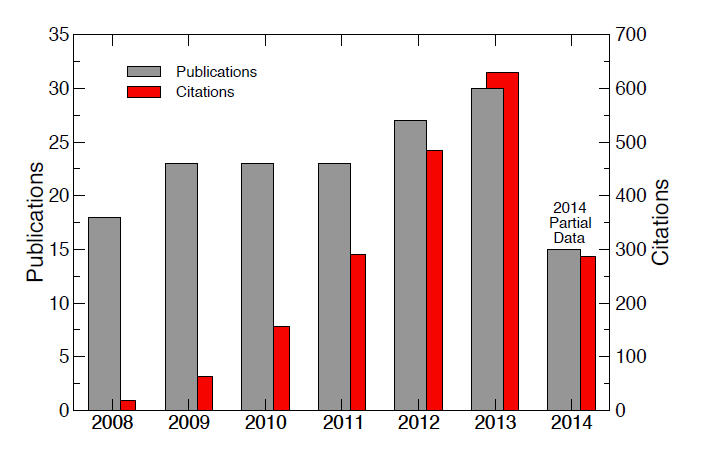

Publications

A summary of articles that have used the HPCC (we need to work on this!)

Expansion

Our main problem is flexibility. The number of cores per node is fixed, so one or many small-memory jobs running on the Microway nodes leave large chunks of memory idle. Virtualization would provide a more flexible environment: create small, medium and large memory templates, then clone nodes from the templates as needed, and recycle the nodes when they are no longer needed to free up resources. This would also enable us to serve other operating systems if needed (SUSE, Ubuntu, Windows).
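The template idea can be pictured as matching each job's memory request to the smallest template that fits, so a small job no longer ties up a large-memory node. A minimal sketch: the function `pick_template` and the template sizes (16, 64 and 256 GB) are hypothetical illustrations, not an existing configuration, and requests are whole gigabytes.

```shell
#!/bin/sh
# Hypothetical template sizes in GB for small, medium and large virtual nodes.
# These values are illustrative assumptions, not an existing HPCC setup.
SMALL_GB=16
MEDIUM_GB=64
LARGE_GB=256

# pick_template MEM_GB: print the smallest template whose memory covers the
# request (whole GB); return 1 if even the large template is too small.
pick_template() {
  if [ "$1" -le "$SMALL_GB" ]; then
    echo small
  elif [ "$1" -le "$MEDIUM_GB" ]; then
    echo medium
  elif [ "$1" -le "$LARGE_GB" ]; then
    echo large
  else
    echo "no template fits $1 GB" >&2
    return 1
  fi
}

# Example: a 4 GB job gets a small node instead of occupying a large one.
#   pick_template 4
```

Choosing the smallest fitting template is what frees the remaining memory for other clones; a job too large for any template is rejected rather than silently placed.
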

Several options are available to explore:

These options would require sufficiently sized hardware that can then be logically presented as virtual nodes (each with a virtual CPU, virtual disk and virtual network on board).

A snapshot of slot usage (`bhosts`) and load (`lsload`) on the Microway nodes n33-n45:

```
[root@sharptail ~]# for i in `seq 33 45`; do bhosts -w n$i| grep -v HOST; done
HOST_NAME  STATUS      JL/U  MAX  NJOBS  RUN  SSUSP  USUSP  RSV
n33        ok          -     32   20     20   0      0      0
n34        ok          -     32   21     21   0      0      0
n35        ok          -     32   28     28   0      0      0
n36        ok          -     32   28     28   0      0      0
n37        ok          -     32   20     20   0      0      0
n38        closed_Adm  -     32   16     16   0      0      0
n39        closed_Adm  -     32   20     20   0      0      0
n40        ok          -     32   23     23   0      0      0
n41        ok          -     32   23     23   0      0      0
n42        ok          -     32   28     28   0      0      0
n43        ok          -     32   23     23   0      0      0
n44        ok          -     32   25     25   0      0      0
n45        ok          -     32   23     23   0      0      0

[root@sharptail ~]# for i in `seq 33 45`; do lsload n$i| grep -v HOST; done
HOST_NAME  status  r15s  r1m   r15m  ut   pg   ls  it     tmp    swp  mem
n33        ok      23.0  22.3  21.9  50%  0.0  0   2e+08  72G    31G  247G
n34        ok      22.8  22.2  22.1  43%  0.0  0   2e+08  72G    31G  247G
n35        ok      29.8  29.7  29.7  67%  0.0  0   2e+08  72G    31G  246G
n36        ok      29.2  29.1  28.9  74%  0.0  0   2e+08  72G    31G  247G
n37        -ok     20.9  20.7  20.6  56%  0.0  0   2e+08  72G    31G  248G
n38        -ok     16.0  10.9  4.9   50%  0.0  0   2e+08  9400M  32G  237G
n39        -ok     20.4  21.1  22.1  63%  0.0  0   2e+08  9296M  32G  211G
n40        ok      23.0  23.0  22.8  76%  0.0  0   2e+08  9400M  32G  226G
n41        ok      23.0  22.4  22.2  72%  0.0  0   2e+08  9408M  32G  226G
n42        ok      23.3  23.5  23.1  70%  0.0  0   2e+08  9392M  32G  236G
n43        ok      22.8  22.8  22.7  65%  0.0  0   2e+08  9360M  32G  173G
n44        ok      25.1  25.1  25.0  78%  0.0  0   2e+08  9400M  32G  190G
n45        ok      23.0  22.9  22.6  64%  0.0  0   2e+08  9400M  32G  226G
```
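To put numbers on the idle-memory argument, the two listings can be summed with awk. A minimal sketch: the function names `slot_usage` and `free_mem_gb` are hypothetical, and it assumes the column layout shown in the snapshot (MAX and NJOBS as fields 4 and 5 of `bhosts -w`, available memory as field 12 of `lsload`, with M/G/T suffixes).

```shell
#!/bin/sh
# slot_usage (hypothetical): sum NJOBS (field 5) and MAX (field 4) from
# `bhosts -w` output; header lines starting with HOST_NAME are skipped.
slot_usage() {
  awk '$1 != "HOST_NAME" { used += $5; total += $4 }
       END { printf "%d of %d slots in use\n", used, total }'
}

# free_mem_gb (hypothetical): sum the available-memory column (field 12)
# of `lsload` output, converting M/G/T suffixes to GB.
free_mem_gb() {
  awk '$1 != "HOST_NAME" {
         v = $12
         n = substr(v, 1, length(v) - 1)   # number without its unit suffix
         if (v ~ /G$/)      gb += n
         else if (v ~ /M$/) gb += n / 1024
         else if (v ~ /T$/) gb += n * 1024
       }
       END { printf "%.0f GB free memory\n", gb }'
}

# Usage on the scheduler host, reusing the loops from the snapshot:
#   for i in `seq 33 45`; do bhosts -w n$i; done | slot_usage
#   for i in `seq 33 45`; do lsload n$i; done | free_mem_gb
```

Summed over the snapshot above, this gives 298 of 416 slots in use (including the closed_Adm nodes n38 and n39) while roughly 2,960 GB of memory is still reported available, which is the imbalance the virtualization proposal targets.
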

Costs

Here is a rough listing of what costs the HPCC generates and who pays the bill. Acquisition costs have so far been covered by faculty grants and the Dell hardware/Energy savings project.

- Grants

- First $270K

- Second $270K (first purchase $155K, second purchase $120K)

- Dell replacement $62K

- Recurring

- Sysadmin, 0.5 FTE of a salaried employee, annual.

- Largest contributor dollar-wise, ITS.

- Energy consumed in data center for power and cooling, annual.

- At peak performance the power draw is estimated at 30.5 kW, which translates to $32K/year.

- Add 50% for cooling energy needs (we're assuming efficient, green hardware).

- Total energy costs at 75% of peak performance: $36K/year, Physical Plant.

- Software (Matlab, Mathematica, Stata, SAS)

- Renewals included in ITS/ACS software suite, annual.

- Hard to estimate, low dollar number.

- Miscellanea

- Intel Compiler, once every 3-4 years (each time we buy new racks), $2,500, ITS/ACS.

- One year of extended HP hardware support.

- To cover the period while /home moved from greentail to sharptail, $2,200, ITS/TSS.

- Contributed funds to GPU HPC acquisition

- DLB Josh Boger, $5-6K

- QAC $2-3K

- Physics $1-2K
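The energy estimates can be reproduced with a short awk calculation. A sketch under stated assumptions: it reads the 30.5 figure as a power draw in kW sustained all year (8,760 hours), and the electricity rate of $0.12/kWh is inferred by working backwards from the $32K/year estimate, not a quoted tariff. The function name `annual_energy_cost` is hypothetical.

```shell
#!/bin/sh
# annual_energy_cost KW RATE COOLING UTIL  (hypothetical helper)
#   KW      electrical load at peak performance, in kilowatts
#   RATE    electricity price in $/kWh (assumed; not stated in the text)
#   COOLING multiplier for cooling overhead (1.5 = add 50%)
#   UTIL    average fraction of peak load actually drawn (0.75 = 75%)
# Prints the estimated yearly cost in dollars, rounded to the nearest dollar.
annual_energy_cost() {
  awk -v kw="$1" -v rate="$2" -v cool="$3" -v util="$4" 'BEGIN {
    hours = 24 * 365                  # 8760 hours in a non-leap year
    kwh = kw * hours * cool * util    # energy used per year, in kWh
    printf "%.0f\n", kwh * rate
  }'
}

# Power only, at peak:             annual_energy_cost 30.5 0.12 1   1
# Power plus cooling, 75% of peak: annual_energy_cost 30.5 0.12 1.5 0.75
```

With these inputs the first call gives about $32K (30.5 kW × 8,760 h ≈ 267,000 kWh) and the second about $36K, since 1.5 × 0.75 = 1.125 times the power-only figure, matching the totals above.
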