
NA VTL Test Scenario

Keeping it all together on a single page for reference.

To Do

- 1) review the client option set (the first email on this was sent in September);

- 2) set up a local disk pool (not that urgent, as the initial clients can go directly to the VTL);

- 3) set up schedules for clients, including morning schedules as on blackhawk;

- 4) add SAN LUN space to the Linux host for db volumes (not urgent, as the db can stay on local disk to start with).

To create nodes on the new TSM server, we can expand or clone the eP app so that new nodes can be created on the TSM instance via the eP app. We need to revisit the TSM node password change policy. I recall you recommended setting the password expiration to 3 days (currently 14 days on blackhawk); I am unsure whether we should make this change.

Server, Uncompressed

It is important to point out that we're using a dedicated library and only port 0a for host access.

| Uncompressed data stream flowing to VTL 700 | | |
|---|---|---|
| Client | TSM Reports | Throughput |
| its-swallowtail-local | 32.61 GB | 43 MB/s |
| its-swallowtail | 204.61 GB | 3.4 MB/s |
| its-swallowtail2 | 636.45 GB | 10 MB/s |
| dragon_home | 104.54 GB | 1.7 MB/s |
| dragon_data | 23.74 GB | 0.4 MB/s |
| streaming | 649.15 GB | 10 MB/s |
| media | 482.50 GB | 8 MB/s |
| openmedia | 248.23 GB | 7 MB/s |
| digitization | 208.59 GB | 8 MB/s |
| courses | 219.27 GB | 3.7 MB/s |
| condor_home | 110.11 GB | 1.8 MB/s |
| Total | 2,919.80 GB | |

All jobs crashed after roughly 17 hrs and 20 mins, when the database exceeded its allocation. That is (17*60)+20 = 1,040 mins, which yields a throughput of (2,919.8*1024)/(1040*60) = 48 MB/s, or 2.8 GB/min, or roughly 4 TB/day.
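The throughput arithmetic can be reproduced with a short Python sketch. It assumes binary units (1 GB = 1024 MB, 1 TB = 1024 GB); with that convention the daily figure comes out just under 4 TB/day (about 3.95). The function name is illustrative only.

```python
def run_throughput(total_gb: float, hours: int, minutes: int) -> dict:
    """Throughput of a backup run that moved total_gb in hours:minutes.

    Assumes binary units: 1 GB = 1024 MB, 1 TB = 1024 GB.
    """
    elapsed_min = hours * 60 + minutes
    mb_per_s = total_gb * 1024 / (elapsed_min * 60)
    gb_per_min = total_gb / elapsed_min
    tb_per_day = gb_per_min * 60 * 24 / 1024
    return {
        "elapsed_min": elapsed_min,
        "mb_per_s": mb_per_s,
        "gb_per_min": gb_per_min,
        "tb_per_day": tb_per_day,
    }


if __name__ == "__main__":
    # 2,919.8 GB reported by TSM before the jobs crashed after 17 h 20 min.
    stats = run_throughput(2919.8, 17, 20)
    print(f"{stats['elapsed_min']} min: {stats['mb_per_s']:.1f} MB/s, "
          f"{stats['gb_per_min']:.2f} GB/min, {stats['tb_per_day']:.2f} TB/day")
```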

We observed total drive throughput on the order of 100 MB/s, so this deteriorated quite a bit. According to the VTL statistics page, a total of 3,131.51 GB was written, taking up 2,580.67 GB of space, which yields a compression ratio of 1.2.

This is using one host and one fiber card; throughput could be doubled.

But if we instead take the TSM total bytes transferred, the compression ratio becomes 2,919/2,581, or 1.13.
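The two ratios differ only in which "before" figure is divided by the space the VTL reports as used. A minimal sketch with the numbers quoted above (the helper name is hypothetical); it also shows that the VTL counts roughly 7% more data written than TSM reports sending.

```python
def reduction_ratio(logical_gb: float, stored_gb: float) -> float:
    """Data presented to the device per unit of space actually used."""
    return logical_gb / stored_gb


VTL_WRITTEN_GB = 3131.51   # VTL statistics page: data written
VTL_USED_GB = 2580.67      # VTL statistics page: space taken up
TSM_SENT_GB = 2919.8       # TSM: total bytes transferred

if __name__ == "__main__":
    vtl_view = reduction_ratio(VTL_WRITTEN_GB, VTL_USED_GB)
    tsm_view = reduction_ratio(TSM_SENT_GB, VTL_USED_GB)
    # How much more the VTL counts as written than TSM reports as sent.
    extra = VTL_WRITTEN_GB / TSM_SENT_GB - 1
    print(f"VTL view {vtl_view:.2f}x, TSM view {tsm_view:.2f}x, "
          f"VTL counts {extra:.1%} more data written")
```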

Server, Desktop Compare

From Monitoring Page

| Files | Capacity Used Before | Capacity Used After | Diff | Ratio for Diff |
|---|---|---|---|---|
| COMPRESSION=YES | | | | |
| hmeij-rhel:/ 7.5 GB | 12.43 GB | 17.40 GB | 4.97 GB | 1.50x |
| sw:~fstarr 7.9 GB | 17.40 GB | 27.00 GB | 9.60 GB | 0.83x |
| sw:/state 31 GB | 27.00 GB | 48.89 GB | 21.89 GB | 1.42x |
| hmeij-win 36.8 GB | 48.89 GB | 83.03 GB | 34.14 GB | 1.07x |
| COMPRESSION=NO | | | | |
| hmeij-rhel:/ 7.5 GB | 11.78 GB | 15.88 GB | 4.10 GB | 1.83x |
| hmeij-win+wwarner-mac 36.8+47.6 GB | 44.60 GB | 101.52 GB | 56.92 GB | 1.48x |
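"Ratio for Diff" is the logical size of the files divided by the growth in used capacity on the monitoring page. The sketch below recomputes it; it treats 12.43 GB as the starting capacity for the first COMPRESSION=YES row (12.43 + 4.97 = 17.40). A ratio below 1.0x means used capacity grew by more than the data sent.

```python
# (files, logical size GB, capacity used before GB, capacity used after GB)
ROWS = [
    ("YES hmeij-rhel:/", 7.5, 12.43, 17.40),
    ("YES sw:~fstarr", 7.9, 17.40, 27.00),
    ("YES sw:/state", 31.0, 27.00, 48.89),
    ("YES hmeij-win", 36.8, 48.89, 83.03),
    ("NO  hmeij-rhel:/", 7.5, 11.78, 15.88),
    ("NO  hmeij-win+wwarner-mac", 36.8 + 47.6, 44.60, 101.52),
]


def diff_ratio(logical_gb: float, before_gb: float, after_gb: float):
    """Return (capacity growth in GB, logical size / growth)."""
    diff = after_gb - before_gb
    return diff, logical_gb / diff


if __name__ == "__main__":
    for name, logical, before, after in ROWS:
        diff, ratio = diff_ratio(logical, before, after)
        print(f"{name:28s} diff={diff:6.2f} GB  ratio={ratio:.2f}x")
```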

From Virtual Lib Page | Statistics Page

| Files | TSM Vol | TSM Reduction | TSM Ratio | Used | Data Written | VTL Ratio |
|---|---|---|---|---|---|---|
| COMPRESSION=YES | | | | | | |
| 169.86 MB | 156.50 MB | | | | | |
| hmeij-rhel:/ 7.5 GB | 4.02 GB | 41% | 1.87x | 5.13 GB | 4.79 GB | 1.56x |
| sw:~fstarr 7.9 GB | 8.73 GB | -13% | 0.90x | 14.73 GB | 14.23 GB | 0.93x |
| sw:/state 31 GB | 18.99 GB | 35% | 1.63x | 36.62 GB | 35.24 GB | 1.47x |
| hmeij-win 36.8 GB | 27.12 GB | 14% | 1.35x | 70.80 GB | 65.72 GB | 1.21x |
| COMPRESSION=NO | | | | | | |
| 86.50 MB | 175.50 MB | | | | | |
| hmeij-rhel:/ 7.5 GB | 6.85 GB | 0% | na | 4.18 GB | 7.65 GB | 1.83x |
| hmeij-win+wwarner-mac 36.8+47.6 GB | 31.42+45.49 GB | 0% | na | 89.82 GB | 131.19 GB | 1.01x |

Note: condor_home picked up the .snapshot directories and bombed; /tmp, used for logging, filled up.

DXI VTL Test Scenario

- server
  - we will set up a Dell PowerEdge 1955 blade (2.6 GHz, dual quad-core Xeon 5355, with 16 GB RAM and 2×36 GB 15K rpm disks)
  - install Red Hat AS v4 Linux, x86 32-bit
  - install a Linux-based TSM server, perhaps v5.5 for potential VMware backup
  - connect the Linux host to the VTL device via fiber using the Dell enclosure
    - note: doing it this way does imply that the IO of TSM traffic will compete with the other blades in the enclosure
    - if this turns into a problem, we will have to grab a desktop PC
    - Matt Stoller appeared OK with this for testing, but production should be a standalone server (PE 2950?)
- tsm clients
  - we will create a separate dsm.opt for existing desktop clients that, in addition to the regular backup schedule, points the clients to the VTL for backup
  - we will initially stagger the first backup events to avoid overloading parts of the network
  - the goal is to ask ITS employees to join in testing
  - the target is 100 clients in a representative distribution: 45% windows, 45% macs, 10% linux

Quantum DXi5500 with 10 TB addressable space: concerns

- we already have 50 TB of data; once the VTL is in service, the virtual tapes will not be 100% utilized, so it is unrealistic to assume maximum storage capacity.

- we are disappointed about having to disable client compression, as this will put more data onto our 10/100 network, so client backups might take longer to complete if network bandwidth is saturated.

Facts To Obtain

- total backup volume of first backup by clients (compare windows vs macs vs linux)

- deduplication rate over time as new clients perform a first backup

- total incremental backup volume by clients (compare windows vs macs vs linux)

- deduplication rate over time

- measure any throughput? probably not relevant

- estimate total volume of backup of production system (how to find the median value?)

| server | type | total mb | average mb | max mb |
|---|---|---|---|---|
| nighthawk | server data | 25,044,693 | 34,930 | 2,509,773 |
| blackhawk | desktop clients | 76,054,658 | 31,336 | 2,514,807 |

Note: the average value for the desktops is heavily skewed, as there is at least one very large client (as in one faculty cluster).
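Because one very large node pulls the mean up, the median (and the share of nodes under a threshold, as in the N(less than 23,000 MB) line) describes a typical desktop better. A minimal sketch, assuming the per-filespace output of the FILESPACES SELECT on this page has already been parsed into (node, MB) pairs; the function names are hypothetical and the sample values are toy numbers, not blackhawk data.

```python
import statistics
from collections import defaultdict


def per_node_totals(rows):
    """rows: iterable of (node_name, filespace_mb). Returns {node: total_mb}."""
    totals = defaultdict(float)
    for node, mb in rows:
        totals[node] += mb
    return dict(totals)


def summarize(sizes_mb, threshold_mb):
    """N, min, max, mean, median and count below threshold_mb."""
    sizes = sorted(sizes_mb)
    below = sum(1 for s in sizes if s < threshold_mb)
    return {
        "n": len(sizes),
        "min": sizes[0],
        "max": sizes[-1],
        "mean": statistics.mean(sizes),
        "median": statistics.median(sizes),
        "below": below,
        "pct_below": 100.0 * below / len(sizes),
    }


if __name__ == "__main__":
    # Toy numbers for illustration only: nine ordinary desktops plus one
    # very large "cluster" node.
    toy = [("d%d" % i, mb) for i, mb in enumerate(
        [5_000, 8_000, 12_000, 15_000, 18_000, 20_000, 22_000, 25_000, 30_000])]
    toy.append(("cluster", 336_555))
    print(summarize(per_node_totals(toy).values(), threshold_mb=23_000))
```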

| “desktop nodes” |
|---|
| N=1,180 |
| Min_MB=0 Max_MB=336,555 Avg_MB=22,949 Sum_MB=27,048,503 |
| N(less than 23,000 MB)=885 … 75% |
| “departmental nodes” |
| N=10 |
| Min_MB=359,354 Max_MB=3,189,296 Sum_MB=12,910,296 |

| “server data” |
|---|
| filer1 + filer2 = 5,034,000 MB |
| filer3 + filer4 = 17,764,000 MB |
| (these are a bit off, as LUNs are represented as 100% used filespace) |

Total (uncompressed) Volume: 27 TB + 13 TB + 23 TB = 63 TB

So, at 2x our desktops alone would use up 13.5 TB of 18 TB, or 75% of the available disk space on the DXi, before even thinking about the incremental volume.
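The same estimate, generalized to other reduction ratios, as a sketch. It rounds the DXi capacity to 18 TB as the text does and ignores incrementals, inactive versions and cartridge overhead. The volumes are the uncompressed totals above; the function names are hypothetical.

```python
DXI_CAPACITY_TB = 18.0   # ~17.81 TB reported, rounded as in the text

# Uncompressed first-backup volumes from the tables above, in TB.
VOLUMES_TB = {"desktops": 27.0, "departmental": 13.0, "servers": 23.0}


def fraction_used(volume_tb: float, reduction: float,
                  capacity_tb: float = DXI_CAPACITY_TB) -> float:
    """Share of DXi capacity taken by a first backup reduced by `reduction`."""
    return volume_tb / reduction / capacity_tb


def reduction_needed(volume_tb: float, capacity_tb: float = DXI_CAPACITY_TB) -> float:
    """Minimum reduction ratio for volume_tb to fit at all."""
    return volume_tb / capacity_tb


if __name__ == "__main__":
    total = sum(VOLUMES_TB.values())
    for ratio in (1.5, 2.0, 4.0):
        print(f"{ratio}x: desktops {fraction_used(VOLUMES_TB['desktops'], ratio):.0%}, "
              f"all data {fraction_used(total, ratio):.0%} of the DXi")
    print(f"everything fits only above {reduction_needed(total):.1f}x")
```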

```sql
SELECT NODE_NAME, FILESPACE_NAME, FILESPACE_TYPE, CAPACITY AS "File System Size in MB", PCT_UTIL, DECIMAL((CAPACITY * (PCT_UTIL / 100.0)), 10, 2) AS "MB of Active Files" FROM FILESPACES ORDER BY NODE_NAME, FILESPACE_NAME
```

- apply deduplication ratios and estimate total needed addressable space

Blackhawk Facts

Assessing the makeup of TSM server blackhawk: if we left the large servers listed on the right behind on blackhawk (for now), the VTL front-end host could have 2 instances of about 15 TB each, one for macs and the other for windows clients. These are roughly balanced, except for the database object counts, which seem heavily skewed towards macs.

| FILESYSTEM STORAGE | | | |
|---|---|---|---|
| TYPE | TALLY | SIZE (MB) | Large servers |
| linux | 6 | 5,738,155 | (2.2T vishnu, 3.2T scooby) |
| mac | 315 | 17,145,231 | (1.5T lo_studio, 1.6T communications) |
| sun | 2 | 16,287 | |
| windows | 829 | 19,321,974 | (0.8T video03, 1T gvothvideo04, 1.2T gvothlab2) |
| unknown | 44 | 0 | |
| TOTALS | 1,196 | 42,221,647 | |

| DB OBJECTS | | |
|---|---|---|
| TYPE | OBJECTS | Large servers |
| linux | 15,296,322 | (10M vishnu, 3.2M scooby) |
| mac | 127,061,815 | (0.5M lo_studio, 0.9M communications) |
| sun | 148,361 | |
| windows | 99,897,539 | (1M video03, 9.7M gvothvideo04, 7.9M gvothlab2) |
| unknown | 0 | |
| TOTALS | 242,404,037 | |
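Subtracting the large servers from each platform shows where the "about 15 TB" per instance comes from. A sketch, assuming 1 TB = 1,000,000 MB (which matches how the 27/13/23 TB totals above are rounded) and using the per-server sizes and object counts from the notes in the tables. In this sketch mac ends up at about 14 TB and 126 million objects, and windows at about 16 TB and 81 million objects.

```python
# FILESYSTEM STORAGE sizes (MB) and DB OBJECTS counts from the tables above.
SIZE_MB = {"linux": 5_738_155, "mac": 17_145_231, "sun": 16_287, "windows": 19_321_974}
OBJECTS = {"linux": 15_296_322, "mac": 127_061_815, "sun": 148_361, "windows": 99_897_539}

# Large servers left behind on blackhawk: (size in TB, objects in millions).
LARGE = {
    "linux": {"vishnu": (2.2, 10.0), "scooby": (3.2, 3.2)},
    "mac": {"lo_studio": (1.5, 0.5), "communications": (1.6, 0.9)},
    "windows": {"video03": (0.8, 1.0), "gvothvideo04": (1.0, 9.7), "gvothlab2": (1.2, 7.9)},
}


def remaining(platform: str):
    """(TB, million objects) left for a VTL instance after removing large servers.

    Assumes 1 TB = 1,000,000 MB.
    """
    big = list(LARGE.get(platform, {}).values())
    tb = SIZE_MB[platform] / 1_000_000 - sum(size for size, _ in big)
    objs = OBJECTS[platform] / 1_000_000 - sum(m for _, m in big)
    return tb, objs


if __name__ == "__main__":
    for platform in ("mac", "windows"):
        tb, objs = remaining(platform)
        print(f"{platform:8s} {tb:5.1f} TB  {objs:6.1f} M objects")
```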

Facts To Provide

To fill out the site survey, a few bits of information:

- Where will the DXi physically be located?
  - Wesleyan University, Information Technology Services, Exley Science Center (Room 516, Data Center), 265 Church Street, Middletown, Connecticut 06459
- Who will be the primary contact (name/phone/email)?
  - Administrative: Henk Meij, 860.685.3783, hmeij@wes
  - Technical: Hong Zhu, 860.685.2542, hzhu@wes
- Are you directly connecting the fibre from the blade center to the DXI?
  - Yes.
- How many virtual tape drives do you plan to use in the DXI (up to 64)?
  - If tape drives don't consume capacity, we would like to start with 50. What is Quantum's recommendation for production? During the test period we would like to back up clients directly to the DXi, i.e., without going to a disk pool first. In production, however, we would like clients to go to a disk pool and then migrate to the VTL, since our node count is beyond 64.
- Do you have a preferred virtual cartridge size in the DXI? (smaller cartridges tend to improve the granularity of restore - if you create 1TB cartridges, they are likely to be involved in a backup when you get a restore request, and you'll have to wait until the backup is done to get that cartridge; smaller cartridges limit this issue … for example configure 40GB cartridges).
  - 40 GB
- For the test, do you plan to mount this in a data center rack cabinet?
  - Yes. If it is installed at the top of the rack, we have enough people to lift it into place.
- What threshold do you use for tape reclamation?
  - 55% on the physical L3584; any recommendation for VTL tapes?

Facts To Ponder

Over the last 3 months we saw 300 GB to 1 TB of incremental backup data, averaging ~850 GB … (most of it compressed).

Also, here is our backup policy:

Desktop clients:

- Files currently on client file system: 2

- Files deleted from client file system: 1

- Number of days to retain inactive version: No limit

- Number of days to retain the deleted version: 60

Server clients, mainly two:

1) First server policy:
   - Files currently on client file system: 2
   - Files deleted from client file system: 1
   - Number of days to retain inactive version: 30
   - Number of days to retain the deleted version: 60
2) Second server policy:
   - Files currently on client file system: 3
   - Files deleted from client file system: 2
   - Number of days to retain inactive version: No limit
   - Number of days to retain the deleted version: 121
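The four settings above appear to correspond to TSM's backup copy group parameters VEREXISTS, VERDELETED, RETEXTRA and RETONLY (our reading of the labels; the page does not say so). The sketch below is a simplified model of how such settings decide which inactive versions survive. It is not TSM's expiration code and ignores details such as when expiration actually runs. The class and function names are hypothetical, and `None` stands for "No limit".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CopyGroup:
    """Simplified backup copy group; None stands for 'No limit'."""
    ver_exists: int            # "Files currently on client file system"
    ver_deleted: int           # "Files deleted from client file system"
    ret_extra: Optional[int]   # "Number of days to retain inactive version"
    ret_only: Optional[int]    # "Number of days to retain the deleted version"


def kept_inactive(inactive_ages: List[int], file_exists: bool, cg: CopyGroup) -> List[int]:
    """Ages (days since becoming inactive) of the inactive versions retained."""
    ages = sorted(inactive_ages)  # youngest (most recent) first
    if file_exists:
        # The active version counts toward ver_exists.
        candidates = ages[:max(cg.ver_exists - 1, 0)]
        return [a for a in candidates if cg.ret_extra is None or a <= cg.ret_extra]
    # File deleted: every version is inactive; at most ver_deleted are kept.
    kept = []
    for i, age in enumerate(ages[:cg.ver_deleted]):
        # Most recent version follows ret_only, older ones ret_extra.
        limit = cg.ret_only if i == 0 else cg.ret_extra
        if limit is None or age <= limit:
            kept.append(age)
    return kept


DESKTOP = CopyGroup(ver_exists=2, ver_deleted=1, ret_extra=None, ret_only=60)
SERVER_1 = CopyGroup(ver_exists=2, ver_deleted=1, ret_extra=30, ret_only=60)
SERVER_2 = CopyGroup(ver_exists=3, ver_deleted=2, ret_extra=None, ret_only=121)

if __name__ == "__main__":
    history = [5, 40, 100]   # hypothetical inactive-version ages in days
    for name, cg in (("desktop", DESKTOP), ("server 1", SERVER_1), ("server 2", SERVER_2)):
        print(name, "exists:", kept_inactive(history, True, cg),
              "deleted:", kept_inactive(history, False, cg))
```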

Goals & Objectives

- evaluate the compression and deduplication ratios of the unit under 2 scenarios: TSM clients send the data to be backed up compressed, or not compressed. The hope is to achieve 2x for compression and 10x for deduplication, yielding a 20x factor for the usable space, with or without client compression.

- evaluate throughput using inline deduplication and assess whether our backup windows could be shortened; perhaps also assess post-processing throughput, since that is an available feature

- hopefully, 100 client desktops will participate (45% windows, 45% macs, 10% unix), yielding data that can be scaled out to assess whether our target of 10 TB usable space (200 TB with a 20x factor) gives us room to grow with this unit for an anticipated period of 3-6 years (this is less important now with the DXi7500)

- the unit should be able to replicate to another unit (one-to-one or selectively) at a future DR site, yet to be built
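The 20x target is the product of the two hoped-for factors applied to the usable space. A small sketch (function name hypothetical) that also shows what 10 TB holds at the combined compress+dedup ratios of roughly 1.5x to 2x measured for first backups elsewhere on this page.

```python
USABLE_TB = 10.0   # target usable space from the goals above


def effective_tb(usable_tb: float, *factors: float) -> float:
    """Logical data that fits when each reduction factor applies in turn."""
    result = usable_tb
    for f in factors:
        result *= f
    return result


if __name__ == "__main__":
    print(f"hoped-for: {effective_tb(USABLE_TB, 2, 10):.0f} TB (2x compression, 10x dedup)")
    for combined in (1.5, 2.08):
        print(f"at {combined}x combined: {effective_tb(USABLE_TB, combined):.1f} TB")
```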

File Share Test

| (Session: 19, Node: EXCHANGEWES5) | | |
|---|---|---|
| 07/25/2008 16:35:23 | Data transfer time: | 18,490.95 sec |
| 07/25/2008 16:35:23 | Network data transfer rate: | 58,470.41 KB/sec |
| 07/25/2008 16:35:23 | Aggregate data transfer rate: | 10,825.66 KB/sec |
| 07/25/2008 16:35:23 | Objects compressed by: | 0% |
| 07/25/2008 16:35:23 | Subfile objects reduced by: | 0% |
| 07/25/2008 16:35:23 | Elapsed processing time: | 27:44:31 |

Quantum reports:

```
Capacity:         17.81TB
Free:             15.81TB
Used:             2.00TB/11.21%
Data reduced by:  43.56%
```

TSM reports:

```
tsm: VTLTSM>q occ

Node Name  Type Filespace   FSID Storage    Number of  Physical   Logical
                Name             Pool Name      Files     Space     Space
                                                       Occupied  Occupied
                                                           (MB)      (MB)
---------- ---- ---------- ----- ---------- --------- --------- ---------
EXCHANGEW- Bkup \\monet\g$     1 VTLPOOL    1,336,817 1,056,386 1,056,386
ES5                                                         .79       .79
```

This test was not very useful beyond the throughput statistics. About 1 TB of files was sent over. The Q7500 reports 2 TB used (presumably 1 TB of staged data and 1 TB for the block pool). Data was sent uncompressed. Unfortunately, the before/after reduction information was not captured from the screen; we recall something like 1.16x. That's compress + dedup!?
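The session summary is internally consistent with "about 1 TB". Both network rate × data transfer time and aggregate rate × elapsed time come to roughly 1,031 GB, which is close to the physical space in `q occ`. Only about 19% of the elapsed time was spent on the wire. The sketch assumes TSM's KB and GB are binary units (1 GB = 1024 × 1024 KB), and the helper names are hypothetical.

```python
def hms_to_seconds(hms: str) -> int:
    """'HH:MM:SS' (hours may exceed 24) -> seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def implied_gb(rate_kb_per_s: float, seconds: float) -> float:
    """Volume implied by a rate and a duration, with 1 GB = 1024 * 1024 KB."""
    return rate_kb_per_s * seconds / (1024 * 1024)


# Figures from the EXCHANGEWES5 session summary and q occ output.
XFER_S = 18_490.95
NETWORK_KB_S = 58_470.41
AGGREGATE_KB_S = 10_825.66
ELAPSED = "27:44:31"
OCCUPANCY_MB = 1_056_386.79

if __name__ == "__main__":
    elapsed_s = hms_to_seconds(ELAPSED)
    print(f"network rate x transfer time : {implied_gb(NETWORK_KB_S, XFER_S):,.0f} GB")
    print(f"aggregate rate x elapsed time: {implied_gb(AGGREGATE_KB_S, elapsed_s):,.0f} GB")
    print(f"q occ physical space         : {OCCUPANCY_MB / 1024:,.0f} GB")
    print(f"share of elapsed time on the wire: {XFER_S / elapsed_s:.0%}")
```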

Uncompressed Data Stream With Desktops

| #1 Before We Started | |
|---|---|
| Capacity | 17.81 TB |
| Free | 17.56 TB |
| Used | 241.81 GB / 1.36% |

Is the above overhead? We recycled the cartridges from the previous data dump, initiated a space reclamation, and then recreated the cartridges.

| #2 Next we did a full backup of these desktops, nodes sending uncompressed data | | |
|---|---|---|
| Nodes | Platform | Share |
| 2 | linux | 8 % |
| 18 | WinNT | 75 % |
| 4 | Mac | 16 % |

Not too realistic … we might be running close to 50/50 windows vs macs in the field.

| #3 Results, first full desktop backups | Data Reduction Performance |
|---|---|
| Before reduction | 885.06 GB |
| After reduction | 426.03 GB |
| Reduction | 2.08x |

The q7500 received 885 GB of data and deduped/compressed that down to 426 GB. Hmm… that looks suspiciously like compression only.

| #4 TSM Server Reports |
|---|
| 836,942 physical MB |
| 5,215,860 files |

Next we re-submitted the desktops above. Initially the nodes were called username-vtl; we renamed them username-vtl2 and initiated a full backup. So if the numbers hold, and very few changes occur on these desktops within this week, then we expect:

| #5 | 1st backup | 2nd Expect | 2nd Observed | % diff |
|---|---|---|---|---|
| Before reduction | 885.06 GB | 1,750 GB | 1,640 GB | + 88 % |
| After reduction | 426.03 GB | 450 GB | 479.59 GB | + 12.7 % |
| Reduction | 2.08x | 4x | 3.44x | + 65 % |

We did not manage to send all nodes again (2 linux, 17 windows, 3 mac … 1 large mac desktop missing). The block pool grew by roughly 10% (new unique blocks) while we resent 88% of the original data, which means the dedup is working. The key here is that this may represent nightly incremental backups (as in backing up files that changed and have already been processed by the VTL at the block level). So 1,640 - 885 = 755 GB flowed to the VTL in the 2nd backup, which added 479.59 - 426.03 = 53.56 GB to the pattern pool, a factor of about 1:14 … clearly working.
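The resend arithmetic can be checked in a few lines. Using the cumulative "Before reduction" and "After reduction" readings from table #5, the second backup presented about 755 GB and added 53.56 GB, a factor of roughly 1:14. The 1,640 GB figure is itself a rounded reading, so the results are approximate. The function name is hypothetical.

```python
def resend_stats(before_1: float, after_1: float, before_2: float, after_2: float) -> dict:
    """Compare two cumulative DXi readings (GB) taken around a resend.

    before_*: data presented to the DXi ("Before reduction"), cumulative
    after_*:  space it occupies ("After reduction"), cumulative
    """
    sent_again = before_2 - before_1
    new_blocks = after_2 - after_1
    return {
        "sent_again_gb": sent_again,
        "new_blocks_gb": new_blocks,
        "factor": sent_again / new_blocks,        # the "1:14" in the text
        "pool_growth": new_blocks / after_1,      # growth of stored data
        "cumulative_ratio": before_2 / after_2,
    }


if __name__ == "__main__":
    s = resend_stats(885.06, 426.03, 1640.0, 479.59)
    print(f"{s['sent_again_gb']:.0f} GB sent again, {s['new_blocks_gb']:.2f} GB new, "
          f"factor 1:{s['factor']:.1f}, pool +{s['pool_growth']:.1%}, "
          f"cumulative {s['cumulative_ratio']:.2f}x")
```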

The baseline of 2x across this mix of desktops is surprising.

| #6 TSM Server Reports | % diff with 1st backup |
|---|---|
| 646,858 physical MB | - 22.7 % |
| 4,558,647 files | - 12.6 % |

Side bar: if this were compression only, then the ~1.7 TB volume would yield an “After” value of ~850 GB, assuming a 50% (2x) reduction, which is close to what TSM achieves.

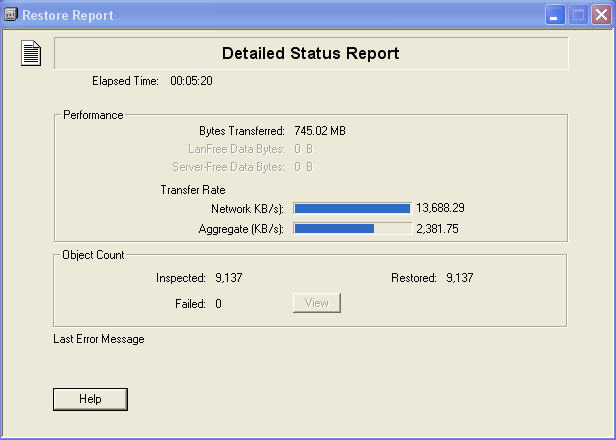

Restore to Desktop

Compressed vs Uncompressed Data Stream With Single Desktop

| #9 | #9A Data Reduction Performance | #9B Data Reduction Performance | #9C DIFFERENCE |
|---|---|---|---|
| Before reduction | 29.31 GB | 63.30 GB | +115% |
| After reduction | 29.31 GB | 46.25 GB | +58% |
| Reduction | 1.00x | 1.37x | |
| Tivoli Desktop Client Reports | COMPRESSED | UNCOMPRESSED | |
| Total number of objects inspected | 226,641 | 227,004 | |
| Total number of objects backed up | 224,449 | 224,808 | |
| Total number of objects updated | 0 | 0 | |
| Total number of objects rebound | 0 | 0 | |
| Total number of objects deleted | 0 | 0 | |
| Total number of objects expired | 0 | 0 | |
| Total number of objects failed | 23 | 23 | |
| Total number of bytes transferred | 25.74 GB | 30.46 GB | +18% |
| Data transfer time | 472.22 sec | 2,594.15 sec | +450% |
| Network data transfer rate | 57,163.84 KB/sec | 12,315.60 KB/sec | |
| Aggregate data transfer rate | 2,094.80 KB/sec | 2,538.40 KB/sec | |
| Objects compressed by | 16% | 0% | |
| Elapsed processing time | 03:34:46 | 03:29:46 | about same! |

So sending compressed client data over is a no-no. When sending the same client data uncompressed, we see a positive compress/dedup effect of 1.37x versus 1.00x. Backup time is about the same, so the only caveat is sending too much uncompressed data over the network for first backups. Incremental backup volume should be OK.
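Why is backup time "about the same" when the data transfer time grew about 5.5x? The sketch below compares the two client summaries from table #9. The network transfer accounts for only about 4% (compressed) and 21% (uncompressed) of the elapsed time. The rest is presumably client-side work such as scanning and per-object overhead; that attribution is our assumption, not something the reports state. Helper names are hypothetical.

```python
def hms_to_seconds(hms: str) -> int:
    """'HH:MM:SS' -> seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def pct_change(old: float, new: float) -> float:
    return 100.0 * (new - old) / old


# Tivoli client summaries for the same desktop, from table #9.
RUNS = {
    "compressed": {"gb": 25.74, "xfer_s": 472.22, "elapsed": "03:34:46"},
    "uncompressed": {"gb": 30.46, "xfer_s": 2594.15, "elapsed": "03:29:46"},
}

if __name__ == "__main__":
    c, u = RUNS["compressed"], RUNS["uncompressed"]
    print(f"bytes sent   : {pct_change(c['gb'], u['gb']):+.0f}%")
    print(f"transfer time: {pct_change(c['xfer_s'], u['xfer_s']):+.0f}%")
    elapsed_change = pct_change(hms_to_seconds(c["elapsed"]), hms_to_seconds(u["elapsed"]))
    print(f"elapsed time : {elapsed_change:+.1f}%")
    for name, run in RUNS.items():
        share = run["xfer_s"] / hms_to_seconds(run["elapsed"])
        print(f"{name:12s} spent {share:.0%} of its elapsed time transferring data")
```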

Exporting Existing Nodes

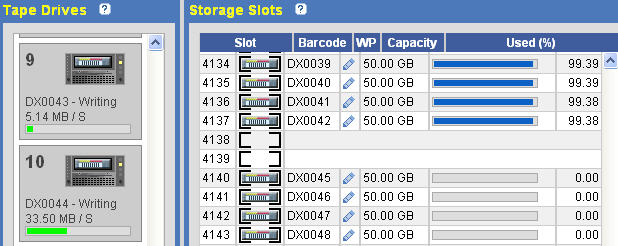

200 cartridges of LTO-3 type with custom size of 50 GB.

In this effort we're trying to export existing nodes from “blackhawk” to our “vtltsm” server. The idea is that when a node is exported and imported, we get both the original data and the inactive/deleted data for that node, which would represent the real world.

We still do not know the rough volume of original data versus inactive/deleted data.

| Before Media Creation | |
|---|---|
| Data Reduction Performance | |
| Before reduction | 204.88 MB |
| After reduction | 204.88 MB |
| Reduction | 1.00x |
| Data Reduced By … | 0% |

- Source = size of node file space on TSM server “blackhawk”

- Cum. Source = sum of source, total size of the source nodes

- Target = estimated as the difference between the current and the previous “Before reduction” values

- Cum. Target = sum of target, total size of target nodes (before reduction)

| EXPORT NODE | Type | Source Node | Sum(Source) | Target Node | Sum(Target) | Before Reduction | After Reduction | Reduced By |
|---|---|---|---|---|---|---|---|---|
| 204.88 MB | 204.88 MB | 1.00 | | | | | | |
| JJACOBSEN04 | Mac | 24,750 M | | | | | | |

Aborted … the export/import fails between the TSM instances … since Hong is out, I'm pretty much unable to fix this.

Large Filesystems

200 cartridges of LTO-3 type with custom size of 50 GB.

In order to get a clearer picture of the results of compression *and* deduplication of *first backups*, we're tackling some filesystems with different content. We'll back these up serially and keep track of the progress as we compile the final statistics.

| After Media Creation | |
|---|---|
| Data Reduction Performance | |
| Before reduction | 204.88 MB |
| After reduction | 204.88 MB |
| Reduction | 1.00x |
| Data Reduced By … | 0% |

The filesystems (note: dragon's home and data are backed up via node miscnode, and the cluster via node miscnode2; these 3 were initiated simultaneously on Sunday 8/17 AM): I submitted miscnode twice, sending up data first, then edited dsm.sys and sent up home. That does not appear to trigger the use of another drive (because of the same node??). I then created another node and sent up the cluster; now we do see 2 drives being used.

```
tsm: VTLTSM>q sess

  Sess Comm.  Sess     Wait   Bytes   Bytes Sess  Platform Client Name
Number Method State    Time    Sent   Recvd Type
------ ------ ------ ------ ------- ------- ----- -------- --------------------
    59 Tcp/Ip IdleW   7.0 M     960     677 Node  Linux86  MISCNODE
    60 Tcp/Ip RecvW     0 S   4.4 K   9.1 G Node  Linux86  MISCNODE
    61 Tcp/Ip IdleW    15 S 183.5 K     829 Node  Linux86  MISCNODE
    62 Tcp/Ip RecvW     0 S  41.0 K   8.6 G Node  Linux86  MISCNODE
    69 Tcp/Ip IdleW    42 S     898     674 Node  Linux86  MISCNODE2
    70 Tcp/Ip Run       0 S   1.2 K  38.9 G Node  Linux86  MISCNODE2
    72 Tcp/Ip Run       0 S     126     163 Admin Linux86  ADMIN
```

| Tivoli Desktop Client Reports | digitization | openmedia | media | streaming | cluster |
|---|---|---|---|---|---|
| Total number of objects inspected | 1,492 | 114,314 | 287,475 | 18,950 | 9,155,715 |
| Total number of objects backed up | 1,492 | 114,314 | 287,321 | 18,950 | 9,153,875 |
| Total number of objects updated | 0 | 0 | 0 | 0 | 0 |
| Total number of objects rebound | 0 | 0 | 0 | 0 | 0 |
| Total number of objects deleted | 0 | 0 | 0 | 0 | 0 |
| Total number of objects expired | 0 | 0 | 0 | 0 | 0 |
| Total number of objects failed | 23 | 2 | 153 | 1 | 192 |
| Total number of bytes transferred | 201.16 GB | 226.89 GB | 691.03 GB | 959.17 GB | 2.58 TB |
| Data transfer time | 472.22 sec | 1,376.50 sec | 3,729.63 sec | 6,758.95 sec | 19,025.81 sec |
| Network data transfer rate | 100,161.52 KB/sec | 172,842.57 KB/sec | 194,282.04 KB/sec | 148,805.54 KB/sec | 145,994.69 KB/sec |
| Aggregate data transfer rate | 28,000.47 KB/sec | 23,246.48 KB/sec | 20,776.25 KB/sec | 29,839.97 KB/sec | 18,600.71 KB/sec |
| Objects compressed by | 0% | 0% | 0% | 0% | 0% |
| Elapsed processing time | 02:05:33 | 02:50:34 | 09:41:16 | 09:21:45 | 41:28:51 |
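The aggregate rate in these client reports is the bytes transferred divided by the elapsed processing time. The sketch below recomputes it for each filesystem as a sanity check, assuming 1 GB = 1024 × 1024 KB and 1 TB = 1024 GB. The cluster figure matches only to within a fraction of a percent because "2.58 TB" is rounded. Helper names are hypothetical.

```python
def hms_to_seconds(hms: str) -> int:
    """'HH:MM:SS' (hours may exceed 24) -> seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def aggregate_kb_per_s(gb: float, elapsed: str) -> float:
    """Bytes transferred / elapsed processing time, in KB/sec."""
    return gb * 1024 * 1024 / hms_to_seconds(elapsed)


# (bytes transferred in GB, elapsed "HH:MM:SS", reported aggregate KB/sec)
REPORTS = {
    "digitization": (201.16, "02:05:33", 28_000.47),
    "openmedia": (226.89, "02:50:34", 23_246.48),
    "media": (691.03, "09:41:16", 20_776.25),
    "streaming": (959.17, "09:21:45", 29_839.97),
    "cluster": (2.58 * 1024, "41:28:51", 18_600.71),
}

if __name__ == "__main__":
    for name, (gb, elapsed, reported) in REPORTS.items():
        computed = aggregate_kb_per_s(gb, elapsed)
        off = 100.0 * (computed - reported) / reported
        print(f"{name:13s} computed {computed:10,.2f}  reported {reported:10,.2f}  ({off:+.2f}%)")
```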

| FILESYSTEM | TSM send | sum(TSM send) | Before Reduction | After Reduction | Reduced By | Data Reduced By |
|---|---|---|---|---|---|---|
| 204.88 MB | 204.88 MB | 1.00 | 0% | | | |
| /mnt/digitization | 201.16 GB | 201.16 GB | 222.25 GB | 214.94 GB | 1.03x | 3.29% |
| /mnt/openmedia | 226.89 GB | 429.78 GB | 478.68 GB | 400.06 GB | 1.20x | 5.99% |
| /mnt/media | 691.03 GB | 1120.81 GB | 1.25 TB | 930.88 GB | 1.34x | 25.42% |
| /mnt/streaming | 959.17 GB | 2079.88 GB | 2.35 TB | 1.66 TB | 1.41x | 29.13% |
| dragon+cluster | sun 10am | | 2.35 TB | 1.66 TB | 1.41x | 29.13% |
| dragon+cluster | sun 04pm | | 2.70 TB | 1.88 TB | 1.44x | 30.09% |
| dragon+cluster | mon 09am | | 4.72 TB | 3.21 TB | 1.47x | 32.02% |
| dragon+cluster | mon 05pm | | 5.35 TB | 3.51 TB | 1.52x | 34.18% |
| dragon+cluster | (partially) tue 09am | | 6.10 TB | 3.90 TB | 1.56x | 36.05% |
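"Reduced By" (a ratio) and "Data Reduced By" (a percentage) are two ways of expressing the same before/after pair: percent = 100 × (1 − 1/ratio). The sketch shows the conversion, using the /mnt/digitization row as a check. Note how percentages compress the top end: 2x is 50% reduced, while 10x is "only" 90%. The function names are hypothetical.

```python
def ratio_from_sizes(before: float, after: float) -> float:
    """'Reduced By' (x): data presented per unit of space used."""
    return before / after


def pct_from_ratio(ratio: float) -> float:
    """'Data Reduced By' (%): share of the presented data not stored."""
    return 100.0 * (1.0 - 1.0 / ratio)


def ratio_from_pct(pct: float) -> float:
    """Inverse of pct_from_ratio."""
    return 1.0 / (1.0 - pct / 100.0)


if __name__ == "__main__":
    # /mnt/digitization row: 222.25 GB before, 214.94 GB after.
    r = ratio_from_sizes(222.25, 214.94)
    print(f"{r:.2f}x -> {pct_from_ratio(r):.2f}% reduced")
    for ratio in (1.41, 1.56, 2.0, 10.0):
        print(f"{ratio}x = {pct_from_ratio(ratio):.1f}% reduced")
```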

| FILESYSTEM | Quick Count of Filename Extensions |
|---|---|
| /mnt/digitization | 850 .tif 68 .aif 44 .VOB 44 .dv 32 .IFO 32 .BUP 30 .db 29 .mov 28 .txt 18 .pct 8 .rcl 8 .jpg 6 .psd 6 .LAY 4 .webloc 4 .par 4 .m2v 4 .aiff 2 .fcp 1 .volinfo 1 .csv |
| /mnt/openmedia | … |
| /mnt/media | 141,326 .jpg 12,044 .mp3 11,339 .hml 1,953 .gif 1,740 .JPEG 1,450 .pdf 1,408 .Wav 175 .mov_wrf 175 .mov_oldrf 140 .mpg 139 .movw 139 .mov 138 .swf 116 .MG 116 .bmp 112 .css 100 .PDF 19 .jp 16 .propris 16 .class 14 .AV 14 .dcr 14 .asx 13 .XL 13 .mo 12 .wma 12 .rf 12 .old 12 .fla 12 .f 12 .DEF 12 .BAT |
| /mnt/streaming | |

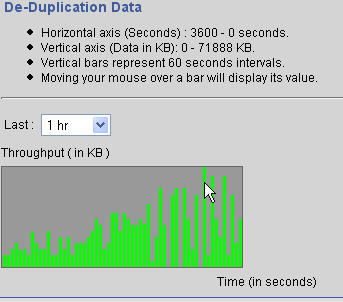

| Vertical axis (Data in KB): | |
|---|---|
| 0-34995 digitization | |
| 0-48059 openmedia | |
| 0-52428 media | … 9 hrs, too long to catch … |

This may be useful info later on …

```
tsm: VTLTSM>q db

Available Assigned   Maximum   Maximum    Page     Total      Used   Pct  Max.
    Space Capacity Extension Reduction    Size    Usable     Pages  Util   Pct
     (MB)     (MB)      (MB)      (MB) (bytes)     Pages                  Util
--------- -------- --------- --------- ------- --------- --------- ----- -----
   13,328   12,000     1,328     5,448   4,096 3,072,000 1,677,880  54.6  54.6
```

```
tsm: VTLTSM>q filespace

Node Name       Filespace   FSID Platform Filespace Is Files-    Capacity   Pct
                Name                      Type      pace             (MB)  Util
                                                    Unicode?
--------------- ----------- ---- -------- --------- --------- ----------- -----
MISCNODE        /mnt/digit-    2 Linux86  NFS       No          277,181.6  78.5
                ization
MISCNODE        /mnt/openm-    3 Linux86  NFS       No          352,776.6  71.2
                edia
MISCNODE        /mnt/media     4 Linux86  NFS       No          774,092.6  95.8
MISCNODE        /mnt/strea-    5 Linux86  NFS       No        1,209,519.4  85.4
                ming
MISCNODE        /dragon_da-    6 Linux86  UNKNOWN   No        1,433,605.1  97.9
                ta
MISCNODE        /dragon_ho-    7 Linux86  UNKNOWN   No        1,228,964.6  98.3
                me
MISCNODE2       /mnt/clust-    1 Linux86  NFS       No        5,242,880.0  70.7
                er
```
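Filespace capacity × percent utilization gives a rough estimate of what a first backup of each filesystem will send. This is the same expression as the "MB of Active Files" column in the FILESPACES SELECT earlier on this page. For the four finished /mnt filesystems, TSM sent roughly 92-95% of that figure. The estimate also counts files TSM may skip or fail on, so treat it as an upper-bound sketch. Names are hypothetical.

```python
# (node, filespace, capacity MB, pct util) from the q filespace output.
FILESPACES = [
    ("MISCNODE", "/mnt/digitization", 277_181.6, 78.5),
    ("MISCNODE", "/mnt/openmedia", 352_776.6, 71.2),
    ("MISCNODE", "/mnt/media", 774_092.6, 95.8),
    ("MISCNODE", "/mnt/streaming", 1_209_519.4, 85.4),
    ("MISCNODE", "/dragon_data", 1_433_605.1, 97.9),
    ("MISCNODE", "/dragon_home", 1_228_964.6, 98.3),
    ("MISCNODE2", "/mnt/cluster", 5_242_880.0, 70.7),
]

# GB that TSM reported sending for the filespaces that had finished.
TSM_SENT_GB = {
    "/mnt/digitization": 201.16,
    "/mnt/openmedia": 226.89,
    "/mnt/media": 691.03,
    "/mnt/streaming": 959.17,
}


def used_mb(capacity_mb: float, pct_util: float) -> float:
    """Used space: CAPACITY * (PCT_UTIL / 100.0), as in the FILESPACES query."""
    return capacity_mb * (pct_util / 100.0)


if __name__ == "__main__":
    total = 0.0
    for node, fs, cap, pct in FILESPACES:
        mb = used_mb(cap, pct)
        total += mb
        line = f"{node:10s} {fs:18s} {mb / 1024:8.1f} GB used"
        if fs in TSM_SENT_GB:
            line += f", TSM sent {TSM_SENT_GB[fs] / (mb / 1024):.0%} of it"
        print(line)
    print(f"total used: {total / 1024 / 1024:.2f} TB")
```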

Final Notes

Notes on the evaluation activities are listed below, cross-referenced against what we stated at the beginning of this page. We got nowhere near 100 participating desktop clients; it was more like 24. It turned out to be a lot of legwork visiting employees' desktops. The VLAN location prevented us from using labs as desktop clients (although they do not represent our clients anyway, as there is no local data on these desktops).

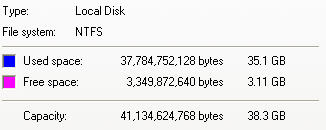

We configured the cartridge media type as LTO-3 with a custom size of 50 GB, and assigned 20 of our 40 drives to the single partition holding the media.

- Positives
  - DXi7500 rack unit installation went smoothly, barring some network complexities in establishing connectivity
  - Support is responsive
  - Backups to the unit from the desktops are fast
  - Restores are really fast. This may be the best performance we will see until we hit the 70% threshold, but with 40 drives in total it will probably still be much better than what we currently experience
- Negatives
  - many bugs (known and unknown ones) were quickly found
  - tapes got stuck in “virtual mount” state while TSM showed no mounted tapes; a reboot is the only way to fix that (although a partition unload/load might do it too) … to be fixed in upcoming firmware.
  - upon one of two reboots to get rid of the virtual tape mount problems, the unit “hung” overnight and an engineer needed to be called in
  - the unit also spontaneously rebooted twice by itself; one of those times was apparently triggered by a fiber disconnect at circa 11pm 8/7 when nobody was in the data center (although mice and ghosts are not ruled out), with the cable reconnecting within 4 mins!?

Our biggest disappointment is the compress/dedup ratios. Some of the tests above do suggest that we can probably achieve ratios greater than 1:10 when looking at the nightly incremental data stream only, that is, TSM backups of files that have already been backed up.

But my biggest concern is new data. Hard disks are now 250 GB (instead of 40 or 80 GB), email quotas go from 100 MB to 2 GB, and there are more pictures, movies, digital books, PDFs, you name it. My unquantified impression is that new data, not modified files, is the significant growth factor. So … we really need to make a dent in the first-backup data stream to get ahead. If compress+dedup is no better than compress only, perhaps our solution should focus on “Massive Array of Idle Disks” (MAID) technology.
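The concern can be made concrete. The overall ratio of a mixed stream is total data in divided by total data stored (a weighted harmonic mean), so the poorly reducing part dominates. The sketch reproduces the desktop test's overall ratio (about 3.4x) from its first-backup and resend parts. It then shows that a hypothetical 50/50 mix of new data at 1.5x and re-sent data at 14x yields only about 2.7x. Those two inputs are illustrative, not measured for such a mix, and the function name is hypothetical.

```python
def blended_ratio(streams):
    """Overall reduction of several streams.

    streams: iterable of (gb_in, reduction_ratio) pairs.
    Overall ratio = total in / total stored (a weighted harmonic mean).
    """
    streams = list(streams)
    total_in = sum(gb for gb, _ in streams)
    total_stored = sum(gb / ratio for gb, ratio in streams)
    return total_in / total_stored


if __name__ == "__main__":
    # Desktop test: first full backup, then the resend (GB in, ratio).
    first = (885.06, 885.06 / 426.03)
    resend = (754.94, 754.94 / 53.56)
    print(f"desktop test overall: {blended_ratio([first, resend]):.2f}x")
    # Hypothetical: half new data at 1.5x, half re-sent data at 14x.
    print(f"50/50 mix: {blended_ratio([(100, 1.5), (100, 14)]):.2f}x")
```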

TSM 2 TSM

We start with …

| Data Reduction Performance | |
|---|---|
| Before reduction | 0.00 KB |
| After reduction | 0.00 KB |
| Reduction | 0.00x |

And create one cartridge …

| Selected Create Media Settings | |
|---|---|
| Partition Name | q7500dedupe |
| Media Type | LTO-3 |
| Media Capacity | 5 GB |
| Number of Media | 1 |
| Starting Barcode | DX0001 |
| Initial Location | Storage Slot |

Now we have … (I always wanted to know the overhead … added another 99 5 GB cartridges)

| Data Reduction Performance | |
|---|---|
| Before reduction | 1.02 MB |
| After reduction | 1.02 MB |
| Reduction | 1.00x |

Finally, we reset the TSM db and checked in the library volumes …

```
[root@miscellaneous bin]# ./dsmserv restore db todate=today
VTLTSM>checkin libv q7500 search=yes checklabel=bar status=scratch
```

Good to go. Export/Import nodes (sizes in MB) …

TSM / DXI reports …

| Node | Type | TSM source | TSM Sum | Before | After | Reduction | Reduced By |
|---|---|---|---|---|---|---|---|
| JJACOBSEN04 | Mac | 24,750 | 24,750 | 30.78 GB | 30.78 GB | 1.00x | 0% |
| (again per Chris) JJACOBSEN04 | Mac | 24,750 | 49,500 | 61.47 GB | 43.91 GB | 1.40x | 28.56% |
| JHOGGARD | Mac | 18,588 | 68,088 | 89.64 GB | 54.92 GB | 1.63x | 38.73% |

Chris requested the re-export of this node; in essence this is a full backup again. The results are the same … around 1.5x for a full backup. We see nothing in terms of dedup performance on the inactive/deleted file space within the first backup. This node is uncompressed.

DXI reports …

| Slot | Barcode | WP | Capacity | Used (%) |
|---|---|---|---|---|
| 4096 | DX0001 | Write Enabled | 5.00 GB | 99.14 |
| 4097 | DX0002 | Write Enabled | 5.00 GB | 99.12 |
| 4098 | DX0003 | Write Enabled | 5.00 GB | 99.09 |
| 4099 | DX0004 | Write Enabled | 5.00 GB | 99.09 |
| 4100 | DX0005 | Write Enabled | 5.00 GB | 6.34 |

DXI VTL Stats

It appears the GUI is not reporting correct information, so scripts were installed and we ran some small tests. We found that compression is flat and dedup may run on the order of 1:4 to 1:6 when backing up single instances of c:\windows\system32 and c:\temp_old … that is a wild mix of windows files, and the results are interesting.

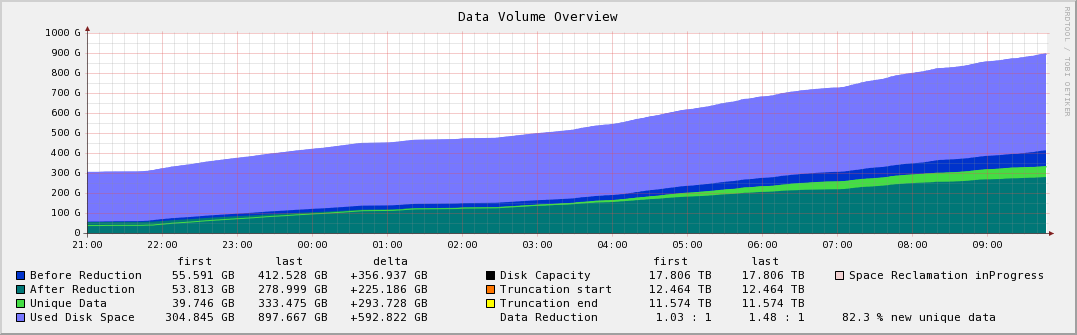

So Chris asked me to back up some large file systems. This is our file share, for both students and departments: roughly 2.5 TB of windows and mac files of all kinds. We call these file systems dragon_home and dragon_data. They are backed up in sequence, home first, then data.

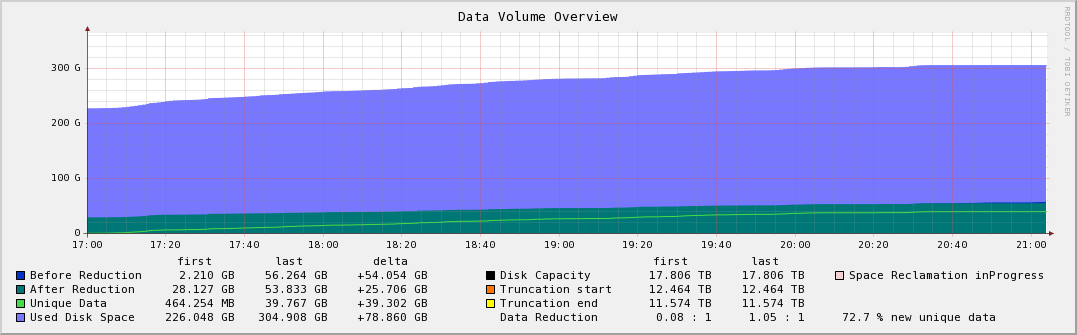

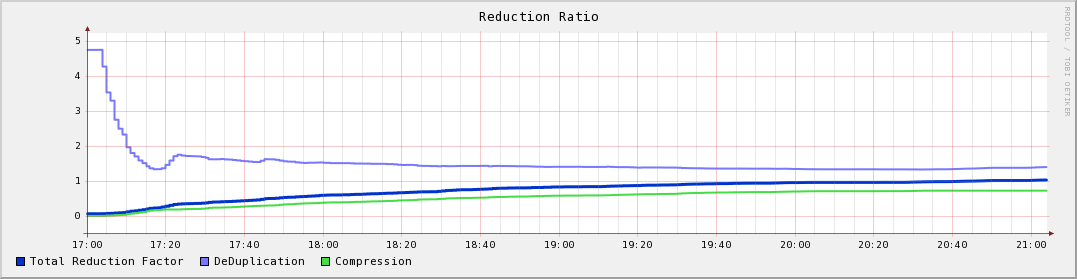

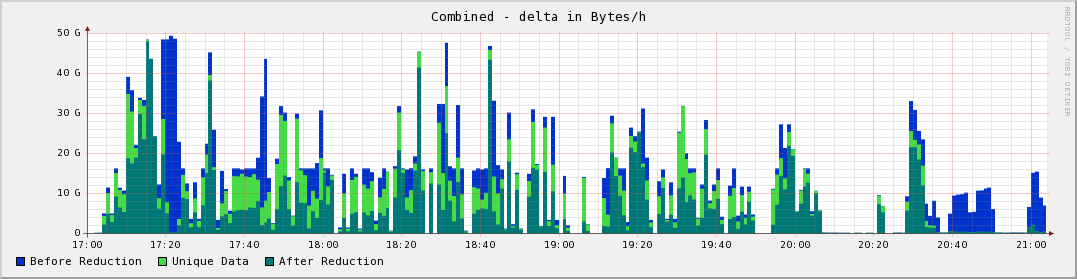

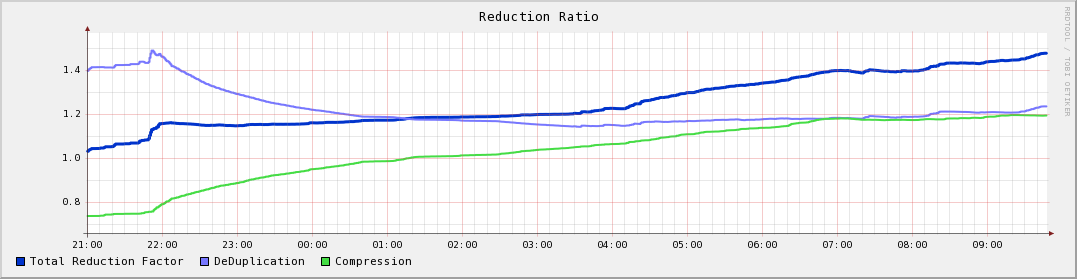

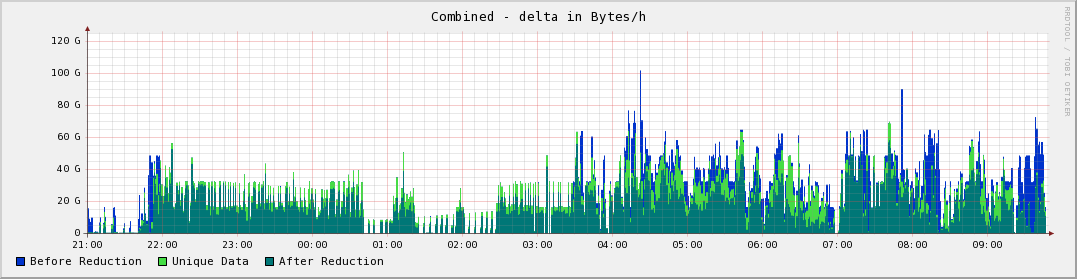

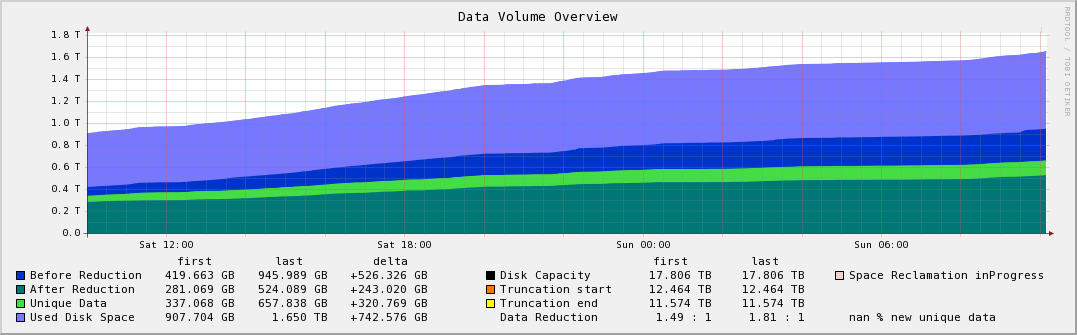

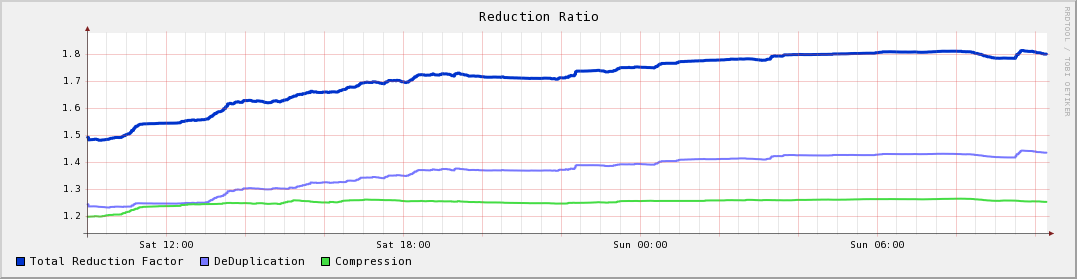

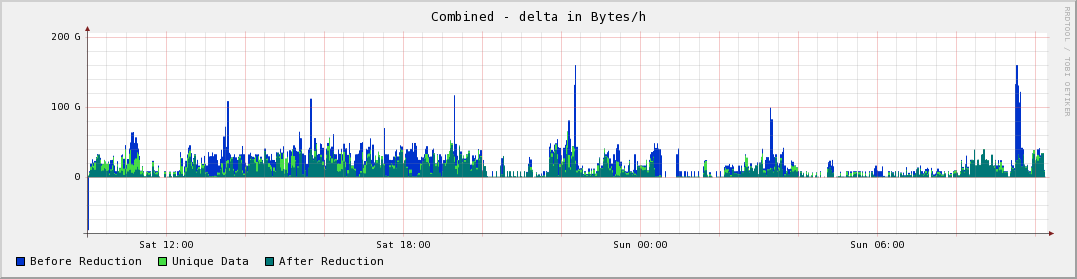

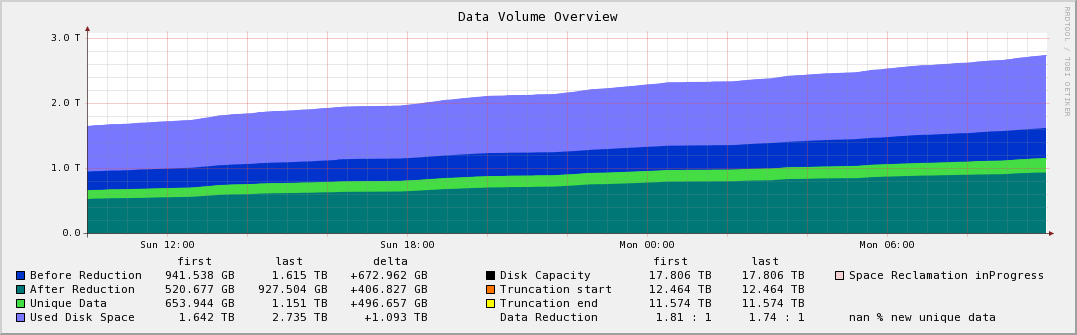

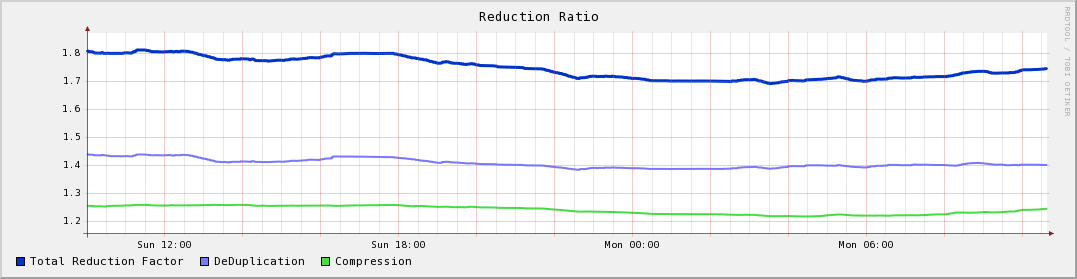

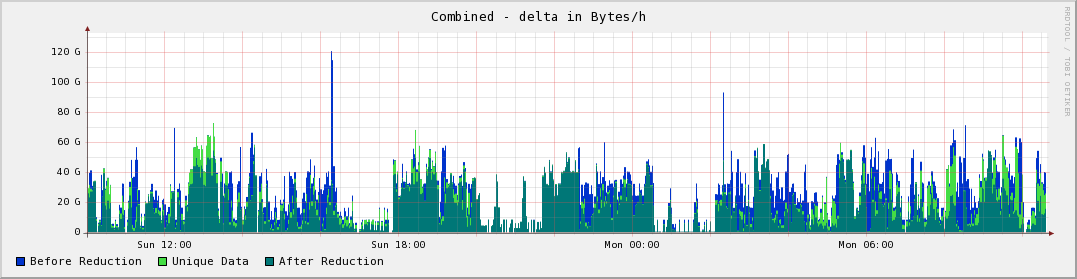

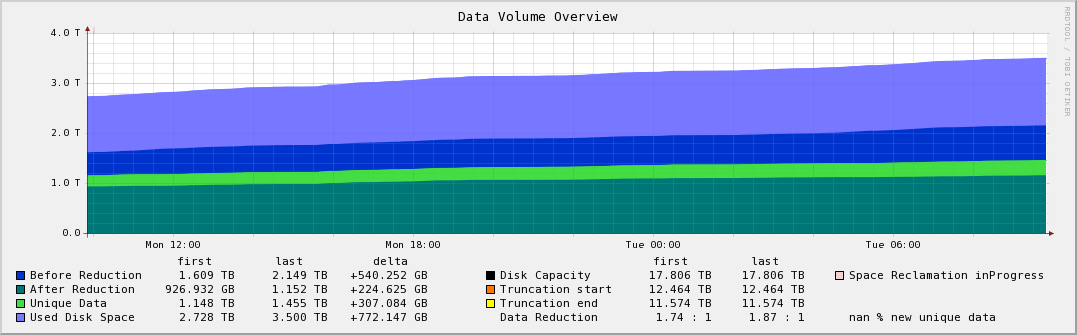

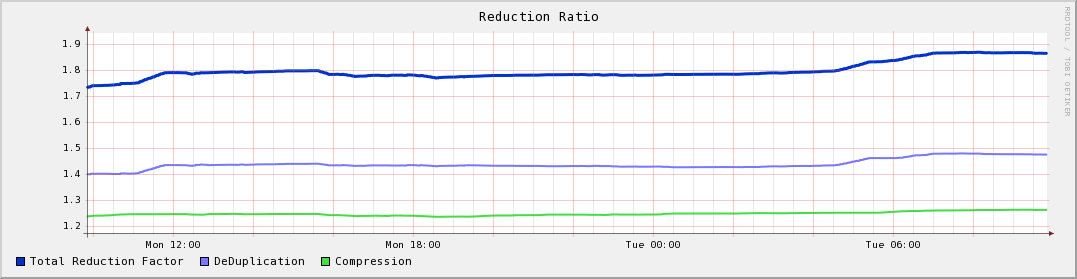

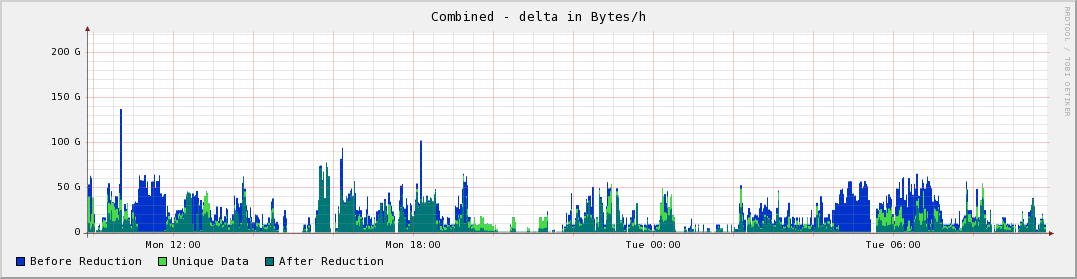

The graphs are presented at 3 hrs, 15 hrs, and then in 24-hour increments. When done, all graphs from the “Full Overview” menu are shown. Hover over the images to get the ImageName_DateTime information.

Media: 60 LTO-3 50 GB cartridges and 20 drives (only one used at any point in time)